【RocketMQ】使用Prometheus + AlertManager制作RocketMQ告警

安装AlertManager

下载地址:https://prometheus.io/download/

解压:

tar -zxvf alertmanager-0.23.0.linux.amd64.tar.gz

修改alertmanager.yml配置文件,添加webhook

route:

group_wait: 30s

group_interval: 5m

repeat_interval: 1h

receiver: 'xadd-rocketmq'

receivers:

- name: 'xadd-rocketmq'

webhook_configs:

- url: 'http://10.201.243.26:2301/rocketmq/api/alerts/webhook'

启动:

nohup ./alertmanager --config.file=./alertmanager.yml &

访问页面:

默认端口为9093,可以看到配置已加载成功

编写告警规则文件

告警规则不是一成不变的,需要根据实际的业务量、机器的配置以及诸多因素进行调整。

刚开始写的规则,有几个一直报错:vector contains metrics with the same labelset after applying alert labels,原因是表达式查出的结果集里,有一个标签:group和系统自带的重复了,在使用{{$labels.group}}取值的时候就不知道取哪个了,使用label_replace函数替换一下即可,以下是修改后的规则文件:

rockrtmq-rules.yaml

groups:

- name: rocketmq-rules

rules:

- alert: high disk ratio warning

expr: sum(rocketmq_brokeruntime_commitlog_disk_ratio) by (cluster,brokerIP) * 100 > 60

for: 10m

labels:

group: xadd-rocketmq

severity: warning

annotations:

message: 集群:{{$labels.cluster}} 的Broker:{{$labels.brokerIP}}磁盘使用率过高,值为:{{ printf "%.2f" $value }}%,持续时间超过10分钟。

description: commitlog对应磁盘使用率高于60%,并持续10分钟,触发此报警

- alert: high disk ratio critical

expr: sum(rocketmq_brokeruntime_commitlog_disk_ratio) by (cluster,brokerIP) * 100 > 80

for: 5m

labels:

group: xadd-rocketmq

severity: critical

annotations:

message: 集群:{{$labels.cluster}} 的Broker:{{$labels.brokerIP}}磁盘使用率过高,值为:{{ printf "%.2f" $value }}%,持续时间超过5分钟,请注意磁盘使用增长。

description: commitlog对应磁盘使用率高于80%,并持续5分钟,触发此报警

- alert: high disk ratio emergency

expr: sum(rocketmq_brokeruntime_commitlog_disk_ratio) by (cluster,brokerIP) * 100 > 90

for: 3m

labels:

group: xadd-rocketmq

severity: emergency

annotations:

message: 集群:{{$labels.cluster}} 的Broker:{{$labels.brokerIP}}磁盘使用率过高,值为:{{ printf "%.2f" $value }}%,持续时间超过3分钟,请尽快处理!

description: commitlog对应磁盘使用率高于90%,并持续3分钟,触发此报警

- alert: high cpu load warning

expr: (1 - avg(rate(node_cpu_seconds_total{job="node-exporter-mq",mode="idle"}[2m])) by (instance)) * 100 > 60

for: 10m

labels:

group: xadd-rocketmq

severity: warning

annotations:

message: 私有云主机节点:{{$labels.instance}} 的CPU负载过高,值为:{{ printf "%.2f" $value }}%,持续时间超过10分钟。

description: 主机节点CPU使用率高于60%,并持续10分钟,触发此报警

- alert: high cpu load critical

expr: (1 - avg(rate(node_cpu_seconds_total{job="node-exporter-mq",mode="idle"}[2m])) by (instance)) * 100 > 80

for: 5m

labels:

group: xadd-rocketmq

severity: critical

annotations:

message: 私有云主机节点:{{$labels.instance}} 的CPU负载过高,值为:{{ printf "%.2f" $value }}%,持续时间超过5分钟,请注意CPU负载是否持续增长。

description: 主机节点CPU使用率高于80%,并持续5分钟,触发此报警

- alert: high memory used warning

expr: (1 - (node_memory_MemAvailable_bytes{job="node-exporter-mq"} / (node_memory_MemTotal_bytes{job="node-exporter-mq"})))* 100 > 60

for: 10m

labels:

group: xadd-rocketmq

severity: warning

annotations:

message: 私有云主机节点:{{$labels.instance}} 的内存使用率过高,值为:{{ printf "%.2f" $value }}%,持续时间超过5分钟。

description: 主机节点内存使用率超过60%,并持续10分钟,触发此报警

- alert: high memory used critical

expr: (1 - (node_memory_MemAvailable_bytes{job="node-exporter-mq"} / (node_memory_MemTotal_bytes{job="node-exporter-mq"})))* 100 > 80

for: 5m

labels:

group: xadd-rocketmq

severity: critical

annotations:

message: 私有云主机节点:{{$labels.instance}} 的内存使用率过高,值为:{{ printf "%.2f" $value }}%,持续时间超过5分钟,请尽快处理!

description: 主机节点内存使用率超过80%,并持续5分钟,触发此报警

- alert: broker maybe down

expr: rocketmq_brokeruntime_start_accept_sendrequest_time offset 2m unless rocketmq_brokeruntime_start_accept_sendrequest_time

labels:

group: xadd-rocketmq

severity: critical

annotations:

message: 集群:{{$labels.cluster}} 的Broker:{{$labels.brokerIP}} 两分钟内Broker列表不一致,请检查Broker是否正常!

description: 对比两分钟内接收发送请求的broker信息列表,如果两分钟前broker列表数量大于当前,则可能是Broker故障,出发此告警

- alert: message backlog warning

expr: label_replace(sum(rocketmq_producer_offset) by (topic) - on(topic) group_right sum(rocketmq_consumer_offset) by (cluster,group,topic) > 5000, "consumergroup", "$1", "group", "(.*)")

for: 10m

labels:

group: xadd-rocketmq

severity: warning

annotations:

message: 集群:{{$labels.cluster}} 的Topic:{{$labels.topic}}出现消息积压 Group为:{{$labels.consumergroup}},已持续10分钟,积压量为:{{$value}}

description: 消息积压:当消费消费速度过慢,导致队列积压,积压数达到5000并持续10分钟,触发此报警

- alert: message backlog critical

expr: label_replace(sum(rocketmq_producer_offset) by (topic) - on(topic) group_right sum(rocketmq_consumer_offset) by (cluster,group,topic) > 10000, "consumergroup", "$1", "group", "(.*)")

for: 10m

labels:

group: xadd-rocketmq

severity: critical

annotations:

message: 集群:{{$labels.cluster}} 的Topic:{{$labels.topic}}出现消息积压 Group为:{{$labels.consumergroup}},已持续10分钟,积压量为:{{$value}}

description: 消息积压:当消费消费速度过慢,导致队列积压,积压数达到10000并持续10分钟,触发此报警

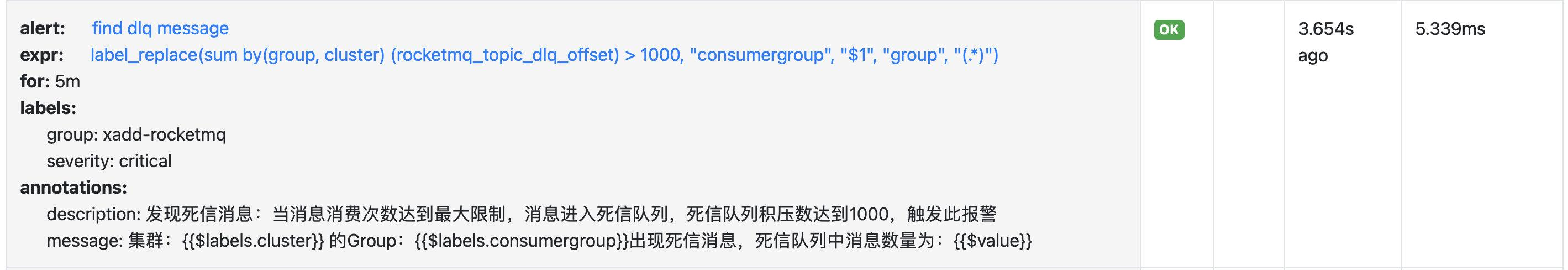

- alert: find dlq message

expr: label_replace(sum(rocketmq_topic_dlq_offset) by (group,cluster) > 1000, "consumergroup", "$1", "group", "(.*)")

for: 5m

labels:

group: xadd-rocketmq

severity: critical

annotations:

message: 集群:{{$labels.cluster}} 的Group:{{$labels.consumergroup}}出现死信消息,死信队列中消息数量为:{{$value}}

description: 发现死信消息:当消息消费次数达到最大限制,消息进入死信队列,死信队列积压数达到1000,触发此报警

- alert: retry message backlog

expr: sum(rocketmq_topic_retry_offset) by (cluster,topic) > 10000

for: 1h

labels:

group: xadd-rocketmq

severity: warning

annotations:

message: 集群:{{$labels.cluster}} 的Topic:{{$labels.topic}}重试队列积压数为:{{$value}},持续时间超过1小时,请检查消费者是否正常

description: 重试队列积压:消息消费失败后,进入重试队列,重试队列积压数达到10000,并持续1小时未消费,触发此报警

- alert: consumer delay

expr: label_replace((sum by(cluster, broker, group, topic) (rocketmq_group_get_latency_by_storetime) / (1000 * 60 * 60) ) > 12, "consumergroup", "$1", "group", "(.*)")

for: 5m

labels:

group: xadd-rocketmq

severity: warning

annotations:

message: 消费者消费延迟超过12小时,持续时间超过5分钟,信息如下:\n 集群:{{$labels.cluster}};\n Topic:{{$labels.topic}};\n Group:{{$labels.consumergroup}};\n 延迟时间:{{ printf "%.2f" $value }} 小时

description: 消息消费延迟:当消息生产后超过12小时还未被消费,持续5分钟,触发此报警

配置Prometheus

这一步主要是关联AlertManager,加载告警规则文件,涉及以下两个配置(我列出的是所有配置):

1、alerting–>alertmanagers

2、rule_files

# my global config

global:

scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.

evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.

# scrape_timeout is set to the global default (10s).

# Alertmanager configuration

alerting:

alertmanagers:

- static_configs:

- targets:

- 127.0.0.1:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.

rule_files:

- "rules/rockrtmq-rules.yaml"

# - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:

# Here it's Prometheus itself.

scrape_configs:

# The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.

- job_name: "prometheus"

static_configs:

- targets: ["localhost:9090"]

- job_name: "RocketMQ-dev"

static_configs:

- targets: ["172.20.10.42:5557"]

- job_name: "RocketMQ-test-vm"

static_configs:

- targets: ["172.24.30.192:15557"]

配置完成之后,重启Prometheus。

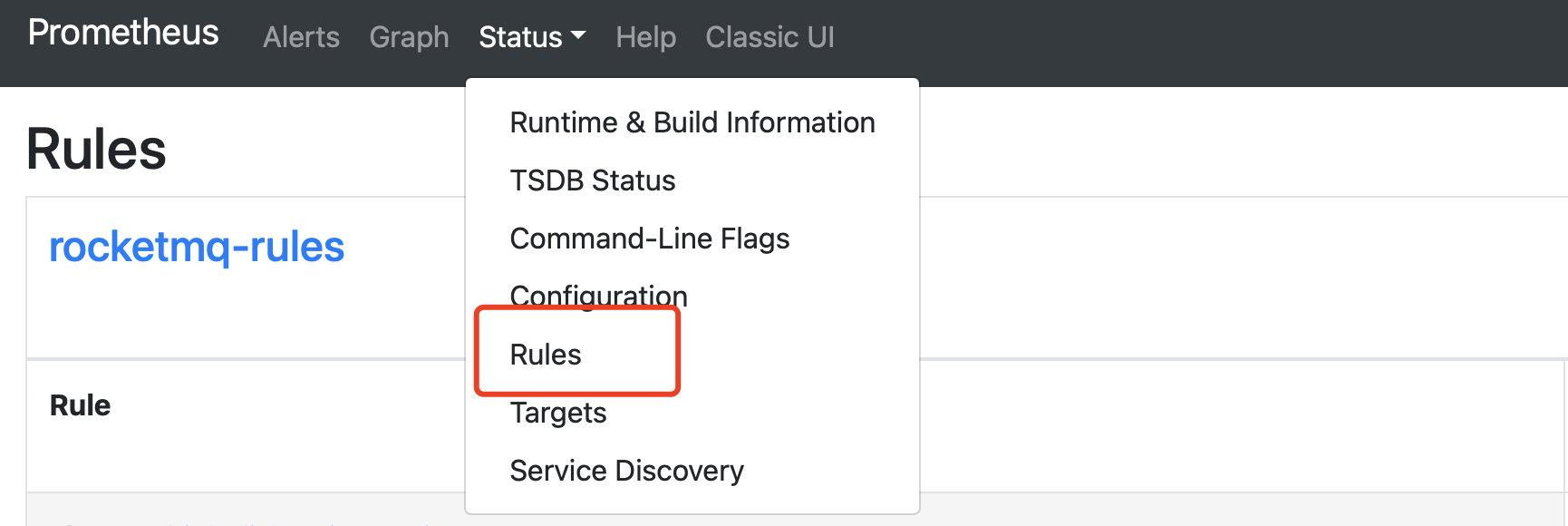

可以在Prometheus页面查看配置的告警规则:

Webhook接口

通过AlertManager发过来的数据其实是一个Map,在Controller中用Map接收就行。

可以解析参数,根据实际的需求,通过程序发送告警信息(邮件、短信、微信、钉钉等)。

/**

* alert manager web hook

*

* @author zhurunhua

* @since 8/30/21 4:30 PM

*/

@RestController

@RequestMapping("/alerts")

@Slf4j

public class AlertController {

@PostMapping("/webhook")

public void list(@RequestBody Map<String, Object> alertInfo) throws Exception {

log.info(JSON.toJSONString(alertInfo));

}

}

收到的参数信息如下:

{

"status":"firing",

"labels":{

"alertname":"high disk ratio",

"brokerIP":"172.24.30.193:10911",

"cluster":"xdf-test1",

"group":"xadd-rocketmq",

"severity":"critical"

},

"annotations":{

"description":"commitlog对应磁盘使用率高于80%,并持续5分钟,触发此报警",

"message":"集群:xdf-test1 的Broker: 172.24.30.193:10911磁盘使用率过高,值为:82.59%,持续时间超过5分钟,请尽快处理!"

},

"startsAt":"2021-09-01T03:38:34.4Z",

"endsAt":"0001-01-01T00:00:00Z",

"generatorURL":"http://iZ2ze434lxf5ptmro9mmprZ:9090/graph?g0.expr=sum+by%28cluster%2C+brokerIP%29+%28rocketmq_brokeruntime_commitlog_disk_ratio%29+%2A+100+%3E+80&g0.tab=1",

"fingerprint":"d6439392acbdfbcb"

}

附:告警规则报错修改前后对比

报错:

修改后: