OSError: We couldn't connect to 'https://huggingface.co' to load this file

OSError: We couldn't connect to 'https://huggingface.co' to load this file, couldn't find it in the cached files and it looks like THUDM/chatglm-6b is not the path to a directory containing a file named configuration_chatglm.py.

Checkout your internet connection or see how to run the library in offline mode at 'https://huggingface.co/docs/transformers/installation#offline-mode'.

Because of a network problem, the library cannot connect to https://huggingface.co, and the error message points us to offline mode:

https://huggingface.co/docs/transformers/installation#offline-mode

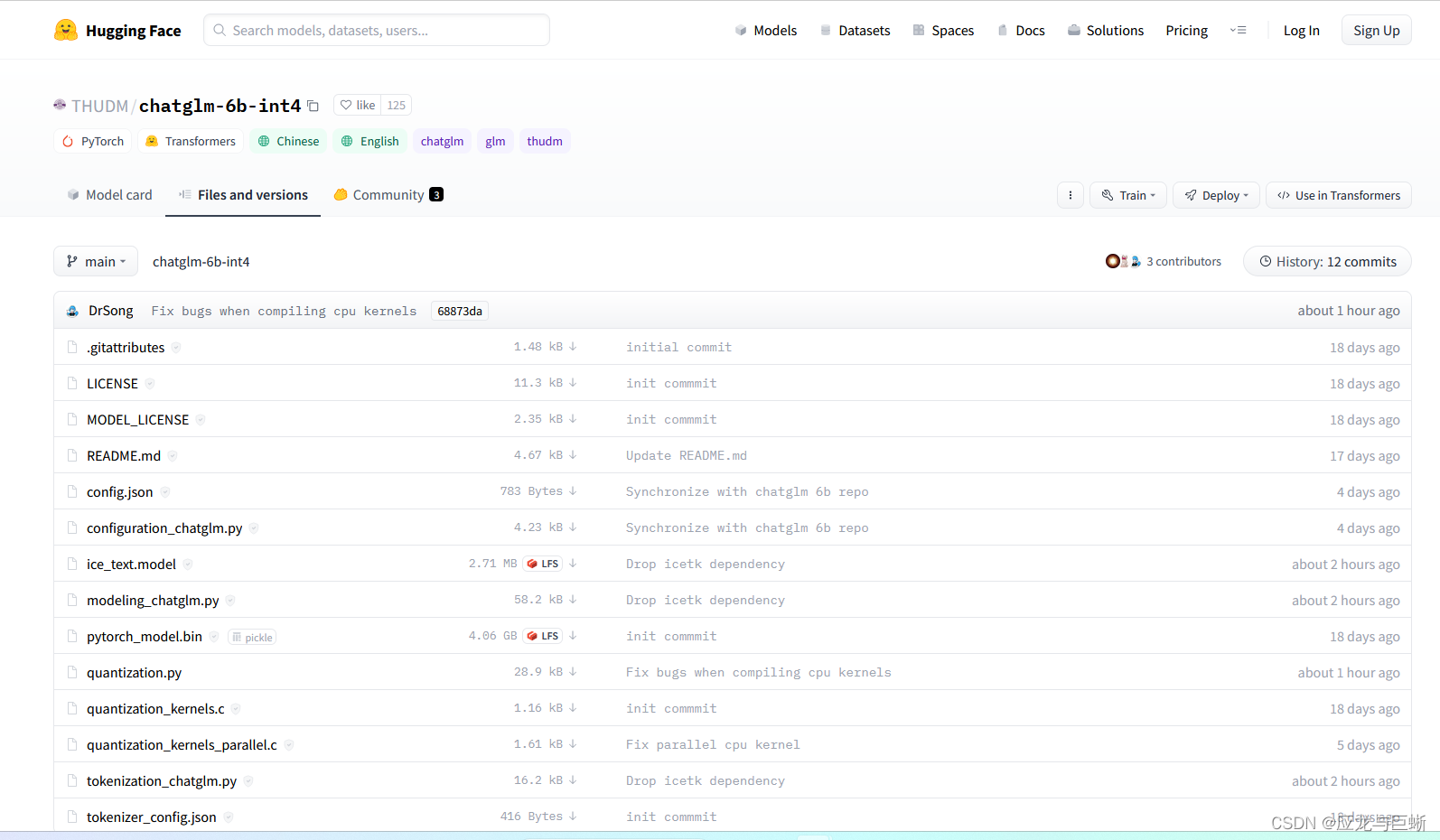
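Offline mode is switched on through environment variables. The snippet below is a minimal sketch, assuming the variable names `HF_HUB_OFFLINE` and `TRANSFORMERS_OFFLINE` described on that documentation page, and assuming they are set before `transformers` is imported:

```python
import os

# Assumption: these names come from the offline-mode documentation linked above.
# Set them before importing transformers so the library sees them at import time.
os.environ["HF_HUB_OFFLINE"] = "1"
os.environ["TRANSFORMERS_OFFLINE"] = "1"

print({name: os.environ[name] for name in ("HF_HUB_OFFLINE", "TRANSFORMERS_OFFLINE")})
```

Offline mode only stops the library from contacting the Hub. The model files still have to exist in the cache or in a local directory.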

Find the files we need on the model repository page and download them one by one:

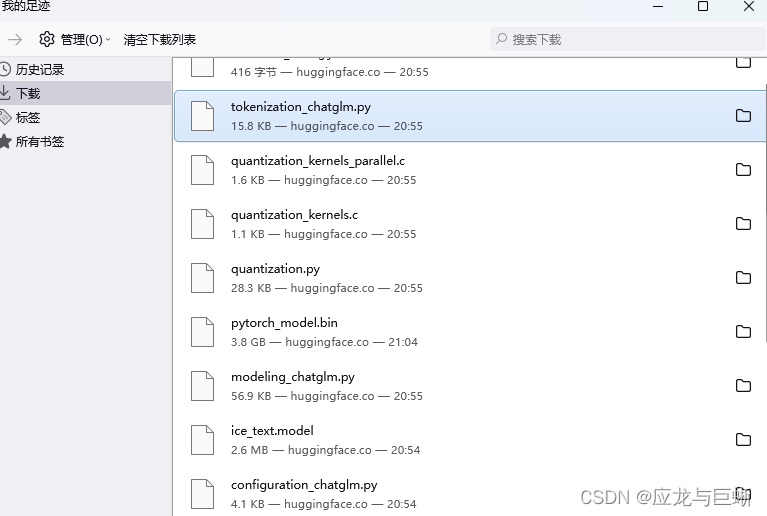

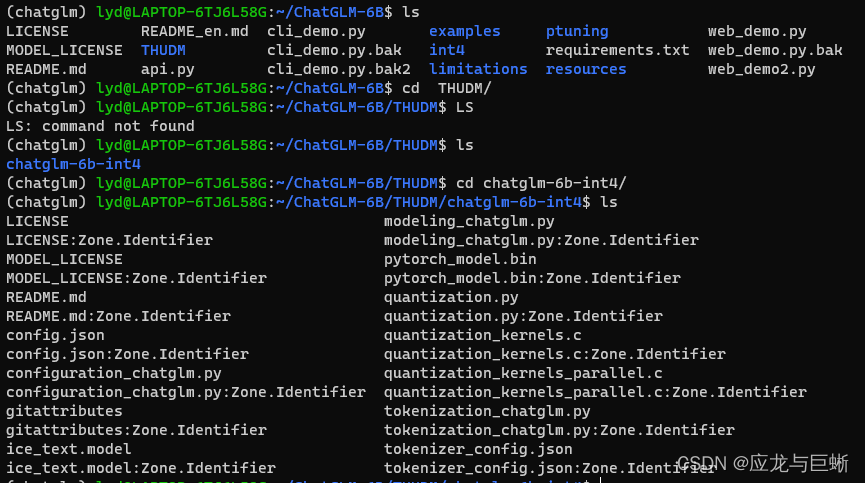

We downloaded these files and put them in a directory of our choosing.

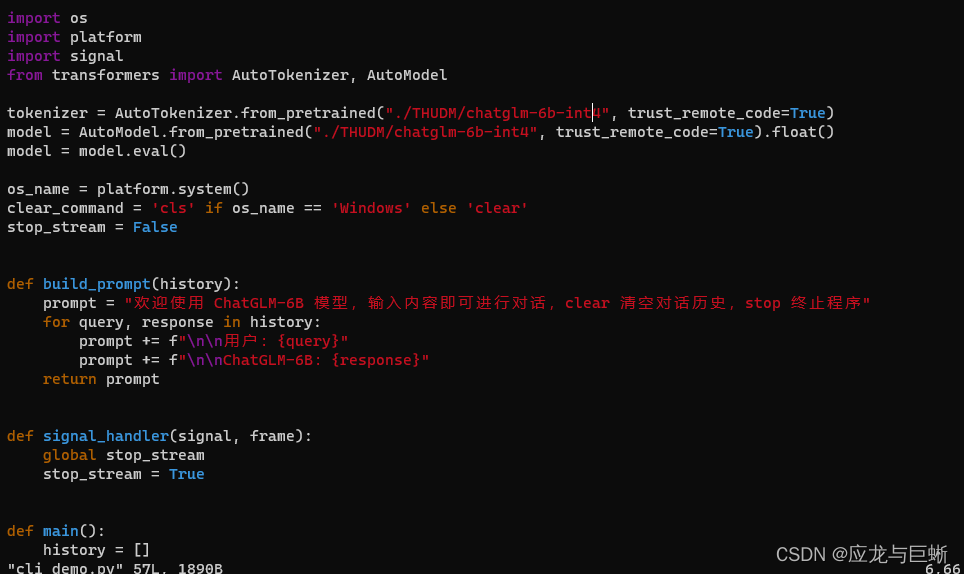
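Before changing any code, it may help to confirm that the directory really contains the files the loader looks for. The following is a rough sketch: `missing_files` and `REQUIRED_FILES` are hypothetical names, and the list holds only `configuration_chatglm.py` (the file named in the error message) and the standard `config.json`. Extend it to match the files you actually downloaded.

```python
from pathlib import Path

# Assumption: a minimal list. configuration_chatglm.py is named in the error
# message; config.json is the standard transformers config file name.
REQUIRED_FILES = ["config.json", "configuration_chatglm.py"]


def missing_files(model_dir, required=REQUIRED_FILES):
    """Return the names in `required` that are not regular files in `model_dir`."""
    root = Path(model_dir)
    return [name for name in required if not (root / name).is_file()]
```

An empty list from `missing_files("path/to/your/model/dir")` means every listed file is present.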

Then all that is left is to modify cli_demo.py.

With this change, the model is loaded from our local directory. ChatGLM-6B-INT4 is the quantized version of the ChatGLM-6B model weights.
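The change to cli_demo.py amounts to passing a local path to `from_pretrained` instead of the Hub ID `THUDM/chatglm-6b`. Here is a hedged sketch of that idea, not the exact edit shown in the screenshot: `MODEL_DIR` and `load_local_chatglm` are hypothetical names, and the `.half().cuda()` call assumes a CUDA-capable GPU.

```python
from pathlib import Path

# Hypothetical location: point this at the directory holding the downloaded files.
MODEL_DIR = Path("./chatglm-6b-int4")


def load_local_chatglm(model_dir=MODEL_DIR):
    """Load the tokenizer and model from a local directory instead of the Hub."""
    model_dir = Path(model_dir)
    if not model_dir.is_dir():
        raise FileNotFoundError(f"model directory not found: {model_dir}")

    # Imported here so the path check above runs even if transformers is missing.
    from transformers import AutoModel, AutoTokenizer

    # trust_remote_code=True lets transformers run the model's own Python files
    # (such as configuration_chatglm.py) that sit next to the weights.
    tokenizer = AutoTokenizer.from_pretrained(str(model_dir), trust_remote_code=True)
    model = AutoModel.from_pretrained(str(model_dir), trust_remote_code=True)
    # Assumption: a CUDA GPU is available; adjust this line for other hardware.
    model = model.half().cuda()
    return tokenizer, model.eval()
```

Because the argument is an existing directory, `from_pretrained` reads the files from disk and does not need to reach https://huggingface.co.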