Scraping Douban movie reviews with a Python crawler

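Fetch Douban movie reviews from at least two pages, collecting the title and the review text of each one, then save all of the reviews to a CSV file.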
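The task has two mechanical parts. The first is building one URL per page from the `start` offset of `https://movie.douban.com/review/best/`. The second is producing CSV output in which a comma inside a review does not start a new column. The following is a minimal stdlib-only sketch. It assumes pages advance in steps of 20, which is the step the script uses. `page_urls` and `write_reviews` are hypothetical helper names, and the sketch uses the standard `csv.writer` in place of joining fields with commas by hand:

```python
import csv
import io

BASE_URL = "https://movie.douban.com/review/best/?start="


def page_urls(pages, step=20):
    """Return one review-list URL per page, offsetting `start` by `step` (assumed 20)."""
    return [BASE_URL + str(step * i) for i in range(pages)]


def write_reviews(rows, fileobj):
    """Write a header plus [title, review] rows as CSV.

    csv.writer quotes any field that contains a comma, quote or newline,
    so a review such as 'good, but long' stays in a single column.
    """
    writer = csv.writer(fileobj)
    writer.writerow(["title", "review"])
    writer.writerows(rows)


# Two pages is the minimum the task asks for.
for url in page_urls(2):
    print(url)

buf = io.StringIO()  # in-memory stand-in for a real CSV file
write_reviews([["A title", "good, but long"]], buf)
print(buf.getvalue())
```

`csv.writer` wraps `good, but long` in double quotes, so spreadsheet programs read it back as one cell. When writing to a real file, open it with `newline=''`, as the `csv` module documentation recommends.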

The full code is as follows:

#doubanyingping.py

import requests

from bs4 import BeautifulSoup

import re

def getHTMLText(url):

kv={'cookie':'viewed="26987890"; bid=LtZlXs-lxWE; __gads=ID=2f05664627755103-228fcabf2eca0085:T=1625664681:RT=1625664681:S=ALNI_MbErnNq9E-gQKSusKFmubZ33JrR1A; gr_user_id=23ab9cd5-12ea-402d-af1f-f82384037c1a; douban-fav-remind=1; ll="108291"; dbcl2="220543507:+d6RaThYFJg"; push_noty_num=0; push_doumail_num=0; _vwo_uuid_v2=D2BBDC590CDF4A6D94F22488FC0507F3C|f20d9349bb838c0ffda7ad25fbd40373; __utmz=30149280.1631793610.4.3.utmcsr=link.csdn.net|utmccn=(referral)|utmcmd=referral|utmcct=/; __utmz=223695111.1631793610.2.2.utmcsr=link.csdn.net|utmccn=(referral)|utmcmd=referral|utmcct=/; ck=re1Y; __utma=30149280.516384608.1625664683.1632279401.1632391188.7; __utmb=30149280.0.10.1632391188; __utmc=30149280; __utma=223695111.1494505190.1631782526.1632279401.1632391188.5; __utmb=223695111.0.10.1632391188; __utmc=223695111; _pk_ref.100001.4cf6=%5B%22%22%2C%22%22%2C1632391194%2C%22https%3A%2F%2Flink.csdn.net%2F%3Ftarget%3Dhttps%253A%252F%252Fmovie.douban.com%252Ftop250%253Fstart%253D0%2526filter%253D%22%5D; _pk_id.100001.4cf6=f45412f108713ba2.1631782532.5.1632391194.1632279427.; _pk_ses.100001.4cf6=*',

'user-Agent':"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.135 Safari/537.36"}

try:

r = requests.get(url,headers=kv,timeout=30)

r.raise_for_status()

r.encoding = 'utf-8'

return r.text

except:

return ""

def fillReviewList(rlist,html):

soup = BeautifulSoup(html,"html.parser")

for div in soup.find_all('div',{"class":"main-bd"}):

rlist.append([div.find('a',{"href":re.compile("https:")}).text.strip("\n "),\

div.find('div',{"class":"short-content"}).text.strip("影评可能有\

剧透\n \xa0(展开)这篇剧评可能有剧透")])

def main():

rinfo=[]

start_url='https://movie.douban.com/review/best/?start='

for i in range(5):

try:

url = start_url+str(20*i)

html = getHTMLText(url)

fillReviewList(rinfo,html)

except:

continue

fw=open("D:/rinfo3.csv","w",encoding='utf-8-sig')

fw.write(",".join(["title","review"])+"\n")

for r in rinfo:

fw.write(','.join(r)+'\n')

fw.close()

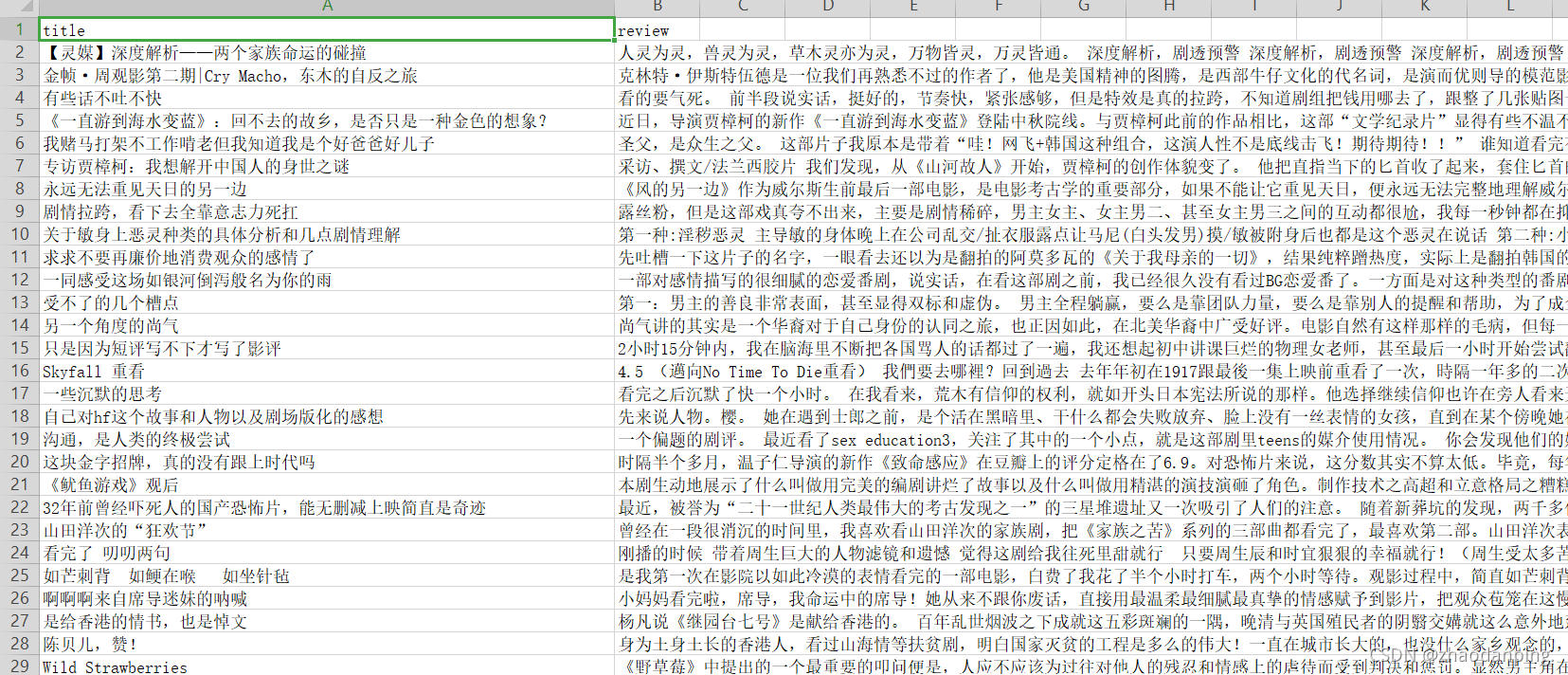

main()运行截图如下:
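One pitfall is trimming the spoiler notice by passing its text to `str.strip()`. `strip()` treats its argument as a set of characters, not as a phrase. Any review that begins or ends with one of those characters therefore loses them. The following is a small stdlib-only comparison. It uses a hypothetical excerpt string (not real scraped data) and assumes the notice phrases are the ones spelled out in the script's strip string:

```python
import re

# The character string that was passed to str.strip() in the script.
STRIP_CHARS = "影评可能有剧透\n \xa0(展开)这篇剧评可能有剧透"

# Assumed notice phrases, derived from that same string.
NOTICE = re.compile(r"(?:这篇)?[影剧]评可能有剧透|\(展开\)")

# Hypothetical excerpt: spoiler notice, the review text "剧情很精彩", then "(展开)".
raw = "这篇影评可能有剧透\n 剧情很精彩\xa0(展开)"

by_strip = raw.strip(STRIP_CHARS)        # strips any of those *characters* from both ends
by_regex = NOTICE.sub("", raw).strip()   # removes only the whole phrases, then whitespace

print(by_strip)  # 情很精彩  (the leading 剧 of the review is lost)
print(by_regex)  # 剧情很精彩
```

Because `str.strip()` with no argument also removes the non-breaking space `\xa0`, the regex version needs no extra cleanup.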