Hive configuration and common problems when connecting to Hive from Java

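1 How to configure Hive

Use which hive to locate the Hive installation path; the hive-site.xml file is under hive/conf/.

The following four properties configure, in order, the JDBC connection string, the driver class name, the username, and the password that Hive uses to connect to its underlying metastore database.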

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.76.100:3306/hive?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123</value>

<description>password to use against metastore database</description>

</property>

</configuration>
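As a quick sanity check on the four properties above, the following is a minimal sketch that reads a hive-site.xml style document with the JDK's built-in XML parser and reports which of the four metastore keys are missing. The class name MetastoreConfigCheck and its helper methods are hypothetical names made up for this illustration, and the XML is an inline sample rather than the real file under hive/conf/.

```java
import java.io.ByteArrayInputStream;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper for illustration only; it is not part of Hive.
public class MetastoreConfigCheck {

    // The four metastore keys discussed in this section.
    public static final String[] REQUIRED = {
        "javax.jdo.option.ConnectionURL",
        "javax.jdo.option.ConnectionDriverName",
        "javax.jdo.option.ConnectionUserName",
        "javax.jdo.option.ConnectionPassword"
    };

    // Collects every <property> name/value pair from a hive-site.xml style stream.
    public static Map<String, String> readProperties(InputStream in) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(in);
        NodeList props = doc.getElementsByTagName("property");
        Map<String, String> result = new LinkedHashMap<>();
        for (int i = 0; i < props.getLength(); i++) {
            Element p = (Element) props.item(i);
            NodeList names = p.getElementsByTagName("name");
            NodeList values = p.getElementsByTagName("value");
            if (names.getLength() > 0 && values.getLength() > 0) {
                result.put(names.item(0).getTextContent().trim(),
                           values.item(0).getTextContent().trim());
            }
        }
        return result;
    }

    // Returns the required keys that are absent from the parsed properties.
    public static List<String> missingKeys(Map<String, String> props) {
        List<String> missing = new ArrayList<>();
        for (String key : REQUIRED) {
            if (!props.containsKey(key)) {
                missing.add(key);
            }
        }
        return missing;
    }

    public static void main(String[] args) throws Exception {
        // Inline sample standing in for hive/conf/hive-site.xml (password omitted on purpose).
        String xml = "<configuration>"
            + "<property><name>javax.jdo.option.ConnectionURL</name>"
            + "<value>jdbc:mysql://192.168.76.100:3306/hive?createDatabaseIfNotExist=true</value></property>"
            + "<property><name>javax.jdo.option.ConnectionDriverName</name>"
            + "<value>com.mysql.jdbc.Driver</value></property>"
            + "<property><name>javax.jdo.option.ConnectionUserName</name>"
            + "<value>root</value></property>"
            + "</configuration>";
        Map<String, String> props =
            readProperties(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        System.out.println("Missing keys: " + missingKeys(props));
    }
}
```

To check a real installation, the inline string could be replaced by a FileInputStream opened on the actual hive-site.xml path.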

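2 To connect to Hive from Java, you need to start Hive's hiveserver2 service and apply the related configuration, as follows:

First, find the following property in hive-site.xml: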

<property>

<name>hive.server2.authentication</name>

<value>NONE</value>

</property>

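The default value is NONE, meaning no authentication is required. If clients must supply credentials when connecting, configure the following.

First, change the value to CUSTOM: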

<property>

<name>hive.server2.authentication</name>

<value>CUSTOM</value>

</property>

Then configure the custom authentication class:

<property>

<name>hive.server2.custom.authentication.class</name>

<!-- Fully qualified name of your custom class, for example: -->

<value>org.apache.hadoop.hive.contrib.auth.CustomPasswdAuthenticator</value>

<description>

Custom authentication class. Used when property

'hive.server2.authentication' is set to 'CUSTOM'. Provided class

must be a proper implementation of the interface

org.apache.hive.service.auth.PasswdAuthenticationProvider. HiveServer2

will call its Authenticate(user, passed) method to authenticate requests.

The implementation may optionally implement Hadoop's

org.apache.hadoop.conf.Configurable class to grab Hive's Configuration object.

</description>

<!-- Note: the class must implement the org.apache.hive.service.auth.PasswdAuthenticationProvider interface; hiveserver2 calls its Authenticate(user, password) method to authenticate requests. -->

</property>

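The custom authentication class can be written in Java as shown below. (The part between the dashed lines is reproduced from another blog author; link: https://blog.csdn.net/lr131425/article/details/72628001)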

-------------------------------------------------------------------------------------------------------------------

package org.apache.hadoop.hive.contrib.auth;

import javax.security.sasl.AuthenticationException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hive.conf.HiveConf;
import org.apache.hive.service.auth.PasswdAuthenticationProvider;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class CustomPasswdAuthenticator implements PasswdAuthenticationProvider {

    private static final Logger LOG = LoggerFactory.getLogger(CustomPasswdAuthenticator.class);

    // Property name pattern; %s is replaced by the user name,
    // e.g. hive.jdbc_passwd.auth.zhangsan
    private static final String HIVE_JDBC_PASSWD_AUTH_PREFIX = "hive.jdbc_passwd.auth.%s";

    private Configuration conf = null;

    @Override
    public void Authenticate(String userName, String passwd) throws AuthenticationException {
        LOG.info("user: " + userName + " try login.");

        // Look up the password configured for this user in hive-site.xml
        String passwdConf = getConf().get(String.format(HIVE_JDBC_PASSWD_AUTH_PREFIX, userName));

        if (passwdConf == null) {
            String message = "user's ACL configuration is not found. user:" + userName;
            LOG.info(message);
            throw new AuthenticationException(message);
        }

        // passwdConf is non-null here, so comparing in this order also rejects a null passwd
        if (!passwdConf.equals(passwd)) {
            String message = "user name and password is mismatch. user:" + userName;
            throw new AuthenticationException(message);
        }
    }

    // Lazily creates the configuration from HiveConf on first use
    public Configuration getConf() {
        if (conf == null) {
            this.conf = new Configuration(new HiveConf());
        }
        return conf;
    }

    public void setConf(Configuration conf) {
        this.conf = conf;
    }
}

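Package this custom class into a jar and place it in the hive/lib directory; otherwise hiveserver2 reports that the class cannot be found. To reproduce the error shown below, I deliberately misspelled the class name in the configuration: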

2017-05-22T09:42:20,241 ERROR [HiveServer2-Handler-Pool: Thread-41] server.TThreadPoolServer: Error occurred during processing of message.

java.lang.RuntimeException: java.lang.ClassNotFoundException: Class org.apache.hadoop.hive.contrib.auth.CustomPasswdAuthenticatve not found

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2195)

at org.apache.hive.service.auth.CustomAuthenticationProviderImpl.<init>(CustomAuthenticationProviderImpl.java:40)

at org.apache.hive.service.auth.AuthenticationProviderFactory.getAuthenticationProvider(AuthenticationProviderFactory.java:70)

at org.apache.hive.service.auth.AuthenticationProviderFactory.getAuthenticationProvider(AuthenticationProviderFactory.java:61)

at org.apache.hive.service.auth.PlainSaslHelper$PlainServerCallbackHandler.handle(PlainSaslHelper.java:106)

at org.apache.hive.service.auth.PlainSaslServer.evaluateResponse(PlainSaslServer.java:103)

at org.apache.thrift.transport.TSaslTransport$SaslParticipant.evaluateChallengeOrResponse(TSaslTransport.java:539)

at org.apache.thrift.transport.TSaslTransport.open(TSaslTransport.java:283)

at org.apache.thrift.transport.TSaslServerTransport.open(TSaslServerTransport.java:41)

at org.apache.thrift.transport.TSaslServerTransport$Factory.getTransport(TSaslServerTransport.java:216)

at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:269)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.lang.ClassNotFoundException: Class org.apache.hadoop.hive.contrib.auth.CustomPasswdAuthenticatve not found

at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2101)

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2193)

... 13 more

Next, configure the username and password in hive-site.xml. If there are several users, add one property per user.

<property>

<name>hive.jdbc_passwd.auth.zhangsan</name>

<value>123456789</value>

<description/>

</property>
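The link between this property and the authenticator is the property name: the custom class formats hive.jdbc_passwd.auth.%s with the user name and compares the configured value with the supplied password. The sketch below reproduces only that lookup with a plain Map standing in for the Hive configuration, so it runs without any Hive jars. PasswdLookupSketch and its methods are hypothetical names made up for this illustration.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-alone illustration of the lookup done by the custom authenticator.
public class PasswdLookupSketch {

    // Same pattern as HIVE_JDBC_PASSWD_AUTH_PREFIX in the custom class.
    static final String PREFIX = "hive.jdbc_passwd.auth.%s";

    // Builds the hive-site.xml property name for a given user.
    public static String propertyNameFor(String userName) {
        return String.format(PREFIX, userName);
    }

    // True only when a password is configured for the user and it matches.
    public static boolean matches(Map<String, String> conf, String userName, String passwd) {
        String expected = conf.get(propertyNameFor(userName));
        return expected != null && expected.equals(passwd);
    }

    public static void main(String[] args) {
        // A Map stands in for the properties loaded from hive-site.xml (assumption).
        Map<String, String> conf = new HashMap<>();
        conf.put("hive.jdbc_passwd.auth.zhangsan", "123456789");

        System.out.println(propertyNameFor("zhangsan"));
        System.out.println("zhangsan, correct password: " + matches(conf, "zhangsan", "123456789"));
        System.out.println("zhangsan, wrong password:   " + matches(conf, "zhangsan", "wrong"));
        System.out.println("lisi, not configured:       " + matches(conf, "lisi", "123456789"));
    }
}
```

A user with no matching property is rejected in the same way as a user with a wrong password, which is why every user needs its own property.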

Then connect to Hive from Java with the following code:

package com.hive;

import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;

public class HiveManage {

    private static final String URLHIVE = "jdbc:hive2://192.168.184.130:10000/default";

    // volatile is needed for double-checked locking to be safe
    private static volatile Connection connection = null;

    public static Connection getHiveConnection() {
        if (null == connection) {
            synchronized (HiveManage.class) {
                if (null == connection) {
                    try {
                        // Load the hiveserver2 JDBC driver, then log in with the
                        // user and password configured in hive-site.xml
                        Class.forName("org.apache.hive.jdbc.HiveDriver");
                        connection = DriverManager.getConnection(URLHIVE, "zhangsan", "123456789");
                    } catch (SQLException e) {
                        e.printStackTrace();
                    } catch (ClassNotFoundException e) {
                        e.printStackTrace();
                    }
                }
            }
        }
        return connection;
    }

    public static void main(String args[]) throws SQLException {
        // String sql = "select ipaddress,count(ipaddress) as count from apachelog "
        // + "group by ipaddress order by count desc";
        String sql1 = "select ipaddress ,t_user,request,agent from apachelog limit 5";

        // The SQL is already bound by prepareStatement, so executeQuery() takes no argument.
        // try-with-resources closes the ResultSet first, then the statement.
        try (PreparedStatement pstm = getHiveConnection().prepareStatement(sql1);
             ResultSet rs = pstm.executeQuery()) {
            while (rs.next()) {
                System.out.println(rs.getString(1) + " " + rs.getString(2)
                        + " " + rs.getString(3) + " " + rs.getString(4));
            }
        }
    }
}

If the following error is reported, the user does not have permission on the directory:

java.lang.RuntimeException: java.lang.RuntimeException: org.apache.hadoop.security.AccessControlException: Permission denied: user=zhangsan, access=EXECUTE, inode="/tmp/hive":root:supergroup:drwx------

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:319)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkTraverse(FSPermissionChecker.java:259)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:205)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:190)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1728)

at org.apache.hadoop.hdfs.server.namenode.FSDirStatAndListingOp.getFileInfo(FSDirStatAndListingOp.java:108)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getFileInfo(FSNamesystem.java:3857)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getFileInfo(NameNodeRpcServer.java:1012)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getFileInfo(ClientNamenodeProtocolServerSideTranslatorPB.java:843)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:982)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2049)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2045)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2043)
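The first line of this trace already says why access was denied: the inode /tmp/hive is owned by root:supergroup with mode drwx------, and user zhangsan needs EXECUTE on a directory to traverse it. The sketch below is a simplified model of POSIX-style permission bits, not the actual HDFS FSPermissionChecker; it ignores superusers and ACLs, and it assumes zhangsan is not a member of supergroup. InodeModeSketch and canTraverse are hypothetical names made up for this illustration.

```java
import java.nio.file.attribute.PosixFilePermission;
import java.nio.file.attribute.PosixFilePermissions;
import java.util.Set;

// Simplified illustration of owner/group/other permission checks; not HDFS code.
public class InodeModeSketch {

    // Decides whether `user` may traverse a directory, given its owner and a
    // mode string such as "drwx------" (the leading type character is skipped).
    public static boolean canTraverse(String user, String owner, boolean userInGroup, String mode) {
        Set<PosixFilePermission> perms = PosixFilePermissions.fromString(mode.substring(1));
        if (user.equals(owner)) {
            return perms.contains(PosixFilePermission.OWNER_EXECUTE);
        }
        if (userInGroup) {
            return perms.contains(PosixFilePermission.GROUP_EXECUTE);
        }
        return perms.contains(PosixFilePermission.OTHERS_EXECUTE);
    }

    public static void main(String[] args) {
        // Values taken from the error message; group membership is an assumption.
        System.out.println("zhangsan on drwx------: " + canTraverse("zhangsan", "root", false, "drwx------"));
        System.out.println("root on drwx------:     " + canTraverse("root", "root", false, "drwx------"));
        // Mode after a chmod to 777.
        System.out.println("zhangsan on drwxrwxrwx: " + canTraverse("zhangsan", "root", false, "drwxrwxrwx"));
    }
}
```

Under these assumptions only the "other" bits apply to zhangsan, and they are all unset, so the fix is to widen the directory's mode or change its ownership.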

进入到hadoop/bin目录执行

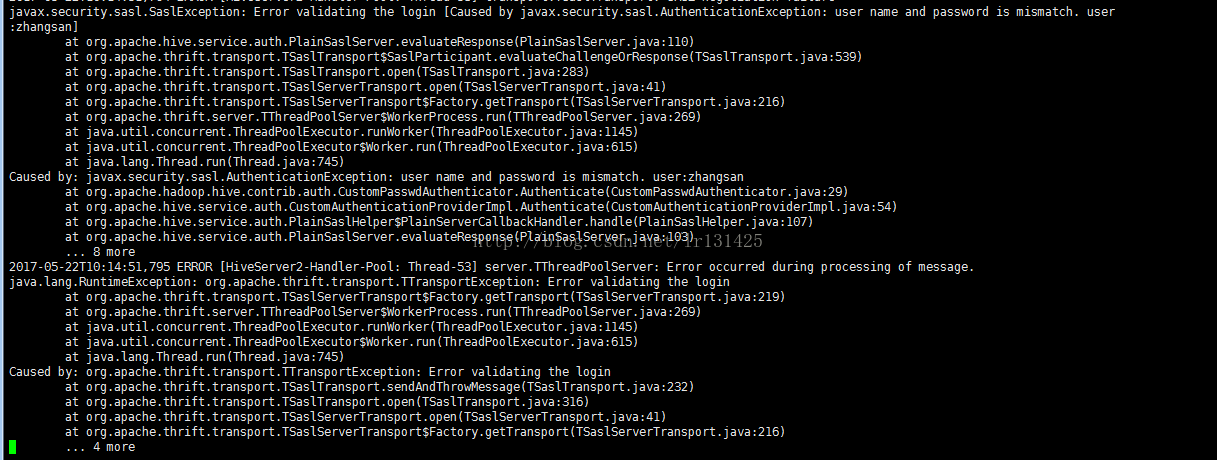

[root@master bin]# ./hdfs dfs -chmod -R 777 /tmp 如果用户名密码错误hive日志显示的就是我们自定义类打印的日志:

---------------------------------------------------------------------------------------------------------------------

Everything up to this point is reproduced from the other blogger's post.

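Before connecting to hiveserver2 from Java, you can use the beeline tool to test the connection and check whether hiveserver2 is configured correctly, as follows:

In the bin/ directory, run nohup hiveserver2 & to start hiveserver2 in the background. The shell prints a process ID, which you can pass to ps to check the process status; you can also run ps -ef | grep hiveserver2 to see whether hiveserver2 has started.

Then open another terminal, go to the hive/bin directory, and run beeline -u jdbc:hive2://ip:10000 to test with beeline whether hiveserver2 started properly.

If you see the error

Could not open connection to jdbc:hive2://localhost:10000: java.net.ConnectException: Connection refused (state=08S01,code=0)

then the port and host name on which hiveserver2 accepts Thrift connections may not be configured correctly in hive-site.xml. beeline connects to hiveserver2 over Thrift, so both must be set; the host is the host part of the beeline -u jdbc:hive2://host:10000 command. These two properties are configured in hive-site.xml as follows: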

<!-- Port used by hiveserver2; the default is 10000 -->

<property>

<name>hive.server2.thrift.port</name>

<value>10000</value>

<description>Port number of HiveServer2 Thrift interface.

Can be overridden by setting $HIVE_SERVER2_THRIFT_PORT</description>

</property>

<!-- Host name that hiveserver2 binds to -->

<property>

<name>hive.server2.thrift.bind.host</name>

<value>hadoop-senior01.atguigu.com</value>

<description>Bind host on which to run the HiveServer2 Thrift interface.

Can be overridden by setting $HIVE_SERVER2_THRIFT_BIND_HOST</description>

</property>
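A "Connection refused" error means nothing accepted the TCP connection on that host and port, so it can help to probe the port directly before involving beeline or JDBC. The following is a minimal sketch using only java.net; PortProbe and isReachable are hypothetical names made up for this illustration, and the default host, port, and timeout in main are placeholders to replace with the values from hive.server2.thrift.bind.host and hive.server2.thrift.port.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper for illustration: checks only that a TCP port accepts connections.
public class PortProbe {

    // Returns true if a TCP connection to host:port succeeds within the timeout.
    public static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Covers connection refused, timeouts, and unresolvable hosts
            return false;
        }
    }

    public static void main(String[] args) {
        // Placeholder defaults; pass the configured host and port as arguments.
        String host = args.length > 0 ? args[0] : "localhost";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 10000;
        boolean ok = isReachable(host, port, 2000);
        System.out.println(host + ":" + port
                + (ok ? " is accepting connections" : " refused the connection or timed out"));
    }
}
```

A successful probe shows only that something is listening on the port; it does not verify authentication or that the listener is hiveserver2, so a beeline test is still needed afterwards.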