[CASE] San Francisco Crime Dataset (CatBoostClassifier)

Reference: top 2% based on CatBoostClassifier

Import libraries and data

import numpy as np
import pandas as pd
pd.set_option("display.max_columns", None)
from sklearn.preprocessing import LabelEncoder, OrdinalEncoder, OneHotEncoder
from sklearn.compose import ColumnTransformer
from sklearn.base import clone
from sklearn.model_selection import StratifiedKFold, train_test_split
from sklearn.metrics import log_loss
from sklearn.decomposition import PCA
from sklearn.mixture import GaussianMixture
import catboost
import gensim

data_train = pd.read_csv("C:\\Users\\Nihil\\Documents\\pythonlearn\\data\\kaggle\\sf-crime\\train.csv")
data_test = pd.read_csv("C:\\Users\\Nihil\\Documents\\pythonlearn\\data\\kaggle\\sf-crime\\test.csv")

Feature processing

def transformTimeDataset(dataset):
    # Parse the timestamp column, then derive calendar features from it.
    dataset['Dates'] = pd.to_datetime(dataset['Dates'])
    dataset['Date'] = dataset['Dates'].dt.date
    # Days elapsed since the earliest date in this dataset.
    dataset['n_days'] = (dataset['Date']-dataset['Date'].min()).apply(lambda x:x.days)
    dataset['Year'] = dataset['Dates'].dt.year
    dataset['DayOfWeek'] = dataset['Dates'].dt.dayofweek
    # Series.dt.weekofyear was removed in pandas 2.0; isocalendar().week replaces it.
    dataset['WeekOfYear'] = dataset['Dates'].dt.isocalendar().week.astype(int)
    dataset['Month'] = dataset['Dates'].dt.month
    dataset['Hour'] = dataset['Dates'].dt.hour
    return dataset

data_train = transformTimeDataset(data_train)
data_test = transformTimeDataset(data_test)
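To sanity-check the time features, here is a minimal sketch on a toy frame. The two timestamps are illustrative values chosen for this example, and the `toy` frame is hypothetical. It computes the ISO week with `dt.isocalendar()`, and shows one alternative way to compute `n_days` without going through Python `date` objects.

```python
import pandas as pd

# Toy timestamps (illustrative values only).
toy = pd.DataFrame({"Dates": ["2015-05-13 23:53:00", "2003-01-06 00:01:00"]})
toy["Dates"] = pd.to_datetime(toy["Dates"])

# ISO week number; isocalendar() returns year/week/day columns.
toy["WeekOfYear"] = toy["Dates"].dt.isocalendar().week.astype(int)

# Days since the earliest date, computed on timestamps truncated to midnight.
day = toy["Dates"].dt.normalize()
toy["n_days"] = (day - day.min()).dt.days

toy["DayOfWeek"] = toy["Dates"].dt.dayofweek  # Monday is 0
print(toy)
```

The first toy row reproduces the values seen in the first row of the training output (DayOfWeek 2, WeekOfYear 20, n_days 4510).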

def transformdGeoDataset(dataset):
    # 1 if the address contains the word "block" (case-insensitive), else 0.
    dataset['Block'] = dataset['Address'].str.contains('block', case=False, na=False).astype(int)
    # One-hot encode the police district; dtype=int keeps 0/1 columns on recent pandas.
    dataset = pd.get_dummies(data=dataset, columns=['PdDistrict'], drop_first=True, dtype=int)
    return dataset

data_train = transformdGeoDataset(data_train)
data_test = transformdGeoDataset(data_test)
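Calling `pd.get_dummies` separately on train and test only yields the same columns when both sets contain the same districts. A minimal sketch of one way to guard against a mismatch, using toy frames (`train`, `test`, `train_d`, and `test_d` are hypothetical names for this example):

```python
import pandas as pd

train = pd.DataFrame({"PdDistrict": ["NORTHERN", "PARK", "MISSION"]})
test = pd.DataFrame({"PdDistrict": ["NORTHERN", "PARK"]})  # MISSION is absent here

train_d = pd.get_dummies(train, columns=["PdDistrict"], dtype=int)
test_d = pd.get_dummies(test, columns=["PdDistrict"], dtype=int)

# Reindex the test frame to the training columns; missing dummies are filled with 0.
test_d = test_d.reindex(columns=train_d.columns, fill_value=0)
print(test_d)
```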

data_train = data_train.drop(["Descript", "Resolution","Address","Dates","Date"], axis = 1)
data_test = data_test.drop(["Address","Dates","Date"], axis = 1)

from sklearn.preprocessing import LabelEncoder
le = LabelEncoder()
data_train.Category = le.fit_transform(data_train.Category)
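Since the target is now an integer code, it helps to remember how to get the names back, for example when labelling the probability columns of a submission. A small sketch on toy labels (`labels` and `le_demo` are hypothetical names, and the label strings are only examples):

```python
from sklearn.preprocessing import LabelEncoder

labels = ["WARRANTS", "OTHER OFFENSES", "OTHER OFFENSES", "LARCENY/THEFT"]
le_demo = LabelEncoder()
codes = le_demo.fit_transform(labels)

# classes_ is sorted; the position of a name in it is its integer code.
print(list(le_demo.classes_))
# inverse_transform maps the codes back to the original strings.
print(list(le_demo.inverse_transform(codes)))
```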

Set features and target

X = data_train.drop("Category",axis=1)
y = data_train['Category']
print(X.head())

DayOfWeek X Y n_days Year WeekOfYear Month Hour Block PdDistrict_CENTRAL PdDistrict_INGLESIDE PdDistrict_MISSION PdDistrict_NORTHERN PdDistrict_PARK PdDistrict_RICHMOND PdDistrict_SOUTHERN PdDistrict_TARAVAL PdDistrict_TENDERLOIN

0 2 -122.425892 37.774599 4510 2015 20 5 23 0 0 0 0 1 0 0 0 0 0

1 2 -122.425892 37.774599 4510 2015 20 5 23 0 0 0 0 1 0 0 0 0 0

2 2 -122.424363 37.800414 4510 2015 20 5 23 0 0 0 0 1 0 0 0 0 0

3 2 -122.426995 37.800873 4510 2015 20 5 23 1 0 0 0 1 0 0 0 0 0

4 2 -122.438738 37.771541 4510 2015 20 5 23 1 0 0 0 0 1 0 0 0 0

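Save the processed data to disk first (to save time)

data_train = pd.DataFrame(data_train)
# index=False keeps the row index out of the file, so no 'Unnamed: 0' column appears on reload.
data_train.to_csv("C:\\Users\\Nihil\\Documents\\pythonlearn\\data\\Results\\Crimedatatrain.csv", index=False)
# The test set is reloaded from Crimedatatest.csv below, so it is saved here as well.
data_test.to_csv("C:\\Users\\Nihil\\Documents\\pythonlearn\\data\\Results\\Crimedatatest.csv", index=False)

Reload

data_train = pd.read_csv("C:\\Users\\Nihil\\Documents\\pythonlearn\\data\\Results\\Crimedatatrain.csv")
data_test = pd.read_csv("C:\\Users\\Nihil\\Documents\\pythonlearn\\data\\Results\\Crimedatatest.csv")
print(data_train.head())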

Category DayOfWeek X Y n_days Year WeekOfYear Month Hour Block PdDistrict_CENTRAL PdDistrict_INGLESIDE PdDistrict_MISSION PdDistrict_NORTHERN PdDistrict_PARK PdDistrict_RICHMOND PdDistrict_SOUTHERN PdDistrict_TARAVAL PdDistrict_TENDERLOIN

0 37 2 -122.425892 37.774599 4510 2015 20 5 23 0 0 0 0 1 0 0 0 0 0

1 21 2 -122.425892 37.774599 4510 2015 20 5 23 0 0 0 0 1 0 0 0 0 0

2 21 2 -122.424363 37.800414 4510 2015 20 5 23 0 0 0 0 1 0 0 0 0 0

3 16 2 -122.426995 37.800873 4510 2015 20 5 23 1 0 0 0 1 0 0 0 0 0

4 16 2 -122.438738 37.771541 4510 2015 20 5 23 1 0 0 0 0 1 0 0 0 0

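Rename the X and Y columns

Note: in this dataset X holds the longitude (about -122) and Y holds the latitude (about 37), so the names 'lat' and 'lon' below are swapped. They are used consistently throughout, so the derived features are unaffected.

def XYrename(data):
    data.rename(columns={'X':'lat', 'Y':'lon'}, inplace = True)
    return data

XYrename(data_train)
XYrename(data_test)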

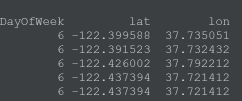

print(data_test.head())

Concatenate the train and test sets and inspect the range of the coordinates

all_data = pd.concat((data_train,data_test),ignore_index=True)
print(all_data['lat'].min(),all_data['lat'].max())
print(all_data['lon'].min(),all_data['lon'].max())

-122.51364209999998 -120.5

37.70787902 90.0
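The maxima printed above (-120.5 and 90.0) sit far from the rest of the data, which suggests placeholder coordinates rather than real locations. A minimal sketch of how one might count such rows before deciding what to do with them, on a toy frame (`coords` and `bad` are hypothetical names; the thresholds are the same ones used later in this post):

```python
import pandas as pd

coords = pd.DataFrame({
    "lat": [-122.4259, -122.4244, -120.5],  # third row mimics a placeholder
    "lon": [37.7746, 37.8004, 90.0],
})

# Flag rows whose coordinates hit the suspicious extreme values.
bad = (coords["lat"] >= -120.5) | (coords["lon"] >= 90)
print(bad.sum(), "suspicious rows")

# Range of the remaining, plausible coordinates.
print(coords.loc[~bad, ["lat", "lon"]].agg(["min", "max"]))
```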

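To improve the model, expand the geographic features

PCA on the coordinates (with two input columns and n_components=2 this rotates the centered coordinates rather than reducing the dimension)

pca = PCA(n_components=2)
pca.fit(data_train[['lat','lon']])
XYt = pca.transform(data_train[['lat','lon']])
print(XYt)

[[ 0.00345383 0.00340625]
[ 0.00345383 0.00340625]
[ 0.02930857 0.00284016]
...
[ 0.00995497 -0.01886836]
[ 0.01077519 -0.03170572]
[-0.03175461 -0.02889356]]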
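To see why PCA on two columns with two components adds rotated axes rather than compressing anything, here is a small sketch on synthetic points (`pts`, `pca_demo`, and `rotated` are hypothetical names, and the data is random, not the crime coordinates). Distances between points are preserved and the explained variance ratios sum to 1.

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)
# Correlated 2-D points on a small scale, loosely like coordinate offsets.
pts = rng.normal(size=(200, 2)) @ np.array([[0.03, 0.0], [0.01, 0.02]])

pca_demo = PCA(n_components=2).fit(pts)
rotated = pca_demo.transform(pts)

# The transform is a centering followed by an orthogonal map,
# so the distance between any two points is unchanged.
d_before = np.linalg.norm(pts[0] - pts[1])
d_after = np.linalg.norm(rotated[0] - rotated[1])
print(d_before, d_after)

# All of the variance is kept when n_components equals the input dimension.
total_ratio = pca_demo.explained_variance_ratio_.sum()
print(total_ratio)
```

For a tree-based model the rotated axes can still be useful, because splits along them correspond to diagonal cuts in the original coordinates.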

Apply principal component analysis and Gaussian mixture clustering to the coordinates to add features and improve model performance

Some introductions to Gaussian mixture clustering:

Machine Learning (17): The GMM Algorithm

Gaussian mixture models

from sklearn.decomposition import PCA
from sklearn.mixture import GaussianMixture
pca = PCA(n_components=2)
pca.fit(data_train[['lat','lon']])
XYt = pca.transform(data_train[['lat','lon']])
data_train['Spatialpca1'] = XYt[:,0]
data_train['Spatialpca2'] = XYt[:,1]
clf = GaussianMixture(n_components=150,covariance_type='diag',random_state=0).fit(data_train[['lat','lon']])
data_train['Spatialpcacluster'] = clf.predict(data_train[['lat','lon']])
print(data_train.Spatialpcacluster.head())

Result:

0 85
1 85
2 8
3 8
4 147
Name: Spatialpcacluster, dtype: int64
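The value n_components=150 is taken as given here. If one wanted to compare a few candidate values, the Bayesian information criterion exposed by `GaussianMixture.bic` is one option. A minimal sketch on synthetic data with three well-separated blobs (`centers`, `pts`, `bic`, and `best_k` are hypothetical names; nothing here is fitted to the crime data):

```python
import numpy as np
from sklearn.mixture import GaussianMixture

rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [5.0, 5.0], [0.0, 5.0]])
pts = np.vstack([c + rng.normal(scale=0.3, size=(100, 2)) for c in centers])

# Fit one mixture per candidate size and record its BIC (lower is better).
bic = {}
for k in (1, 2, 3, 4, 5):
    gm = GaussianMixture(n_components=k, covariance_type="diag", random_state=0).fit(pts)
    bic[k] = gm.bic(pts)

best_k = min(bic, key=bic.get)
print(bic)
print("lowest BIC at k =", best_k)
```

On the full dataset each fit is expensive, so in practice this kind of comparison would likely be run on a subsample.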

DEMO (wrap the steps in a function so that both the train and test sets can be transformed)

from sklearn.decomposition import PCA
from sklearn.mixture import GaussianMixture

def Spatialtransfer(dataset):
    lan_median = dataset[dataset['lat']<-120.5]['lat'].median()
    lon_median = dataset[dataset['lon']<90]['lon'].median()
    dataset.loc[dataset['lat']>=-120.5,'lan']= lan_median
    dataset.loc[dataset['lon']>=90,'lon']= lon_median
    dataset['lat+lon']=dataset['lat']+dataset['lon']
    dataset['lat-lon']=dataset['lat']-dataset['lon']
    dataset['Spatial30_1'] = dataset['lat']*np.cos(np.pi/6)+dataset['lon']*np.sin(np.pi/6)
    dataset['Spatial30_2'] = dataset['lon']*np.cos(np.pi/6)-dataset['lan']*np.sin(np.pi/6)
    dataset['Spatial60_1'] = dataset['lat']*np.cos(np.pi/3)+dataset['lon']*np.sin(np.pi/3)
    dataset['Spatial60_2'] = dataset['lon']*np.cos(np.pi/3)-dataset['lan']*np.sin(np.pi/3)
    dataset['Spatial1'] = (dataset['lat'] - dataset['lan'].min()) ** 2 + (dataset['lon'] - dataset['lon'].min()) ** 2
    dataset['Spatial2'] = (dataset['lat'].max() - dataset['lan']) ** 2 + (dataset['lon'] - dataset['lon'].min()) ** 2
    dataset['Spatial3'] = (dataset['lat'] - dataset['lan'].min()) ** 2 + (dataset['lon'].max() - dataset['lon']) ** 2
    dataset['Spatial4'] = (dataset['lat'].max() - dataset['lan']) ** 2 + (dataset['lon'].max() - dataset['lon']) ** 2
    dataset['Spatial5'] = (dataset['lat'] - lan_median) ** 2 + (dataset['lon'] - lon_median) ** 2
    pca = PCA(n_components=2)
    pca.fit(dataset[['lat','lon']])
    XYt = pca.transform(dataset[['lat','lon']])
    dataset['Spatialpca1'] = XYt[:,0]
    dataset['Spatialpca2'] = XYt[:,1]
    clf = GaussianMixture(n_components=150,covariance_type='diag',random_state=0).fit(dataset[['lat','lon']])
    dataset['Spatialpcacluster'] = clf.predict(dataset[['lat','lon']])
    return dataset

Spatialtransfer(data_train)
print(data_train.head())

It runs and prints:

Category DayOfWeek lat lon n_days Year WeekOfYear Month Hour Block PdDistrict_CENTRAL PdDistrict_INGLESIDE PdDistrict_MISSION PdDistrict_NORTHERN PdDistrict_PARK PdDistrict_RICHMOND PdDistrict_SOUTHERN PdDistrict_TARAVAL PdDistrict_TENDERLOIN lan lat+lon lat-lon Spatial30_1 Spatial30_2 Spatial60_1 Spatial60_2 Spatial1 Spatial2 Spatial3 Spatial4 Spatial5 Spatialpca1 Spatialpca2 Spatialpcacluster

0 37 2 -122.425892 37.774599 4510 2015 20 5 23 0 0 0 0 1 0 0 0 0 0 NaN -84.651293 -160.200490 -87.136633 NaN -28.499184 NaN 0.004541 NaN 0.002149 NaN 0.000090 -0.001242 -0.008148 83

1 21 2 -122.425892 37.774599 4510 2015 20 5 23 0 0 0 0 1 0 0 0 0 0 NaN -84.651293 -160.200490 -87.136633 NaN -28.499184 NaN 0.004541 NaN 0.002149 NaN 0.000090 -0.001242 -0.008148 83

2 21 2 -122.424363 37.800414 4510 2015 20 5 23 0 0 0 0 1 0 0 0 0 0 NaN -84.623949 -160.224777 -87.122401 NaN -28.476062 NaN 0.008626 NaN 0.000446 NaN 0.000688 0.006807 -0.032724 101

3 16 2 -122.426995 37.800873 4510 2015 20 5 23 1 0 0 0 1 0 0 0 0 0 NaN -84.626123 -160.227868 -87.124452 NaN -28.476982 NaN 0.008760 NaN 0.000477 NaN 0.000760 0.004378 -0.033838 101

4 16 2 -122.438738 37.771541 4510 2015 20 5 23 1 0 0 0 0 1 0 0 0 0 NaN -84.667196 -160.210279 -87.149287 NaN -28.508255 NaN 0.004551 NaN 0.002844 NaN 0.000513 -0.014443 -0.008461 88

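There is still a small bug: some occurrences of 'lan' were never changed to 'lat', which produces the NaN values above. I will fix it this afternoon; the rest of the function is fine.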
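A quick way to catch this kind of typo is to count missing values per column right after the transform. The sketch below reproduces the mistake on a toy frame (`df` and `nan_counts` are hypothetical names): assigning through `.loc` to the misspelled column 'lan' creates a new column that is NaN everywhere except the masked rows, and every feature computed from it inherits those NaNs.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"lat": [-122.43, -122.42, -120.5], "lon": [37.77, 37.80, 90.0]})
lat_median = df.loc[df["lat"] < -120.5, "lat"].median()

# Typo reproduced on purpose: 'lan' instead of 'lat' creates a new, mostly NaN column.
df.loc[df["lat"] >= -120.5, "lan"] = lat_median
df["Spatial30_2"] = df["lon"] * np.cos(np.pi / 6) - df["lan"] * np.sin(np.pi / 6)

# Columns with any missing values point straight at the problem.
nan_counts = df.isna().sum()
print(nan_counts[nan_counts > 0])
```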

Find and replace in PyCharm: Ctrl + R

Batch find and replace in PyCharm

After the fix:

from sklearn.decomposition import PCA
from sklearn.mixture import GaussianMixture

def Spatialtransfer(dataset):
    # Replace out-of-range coordinates with the median of the valid ones.
    lat_median = dataset[dataset['lat']<-120.5]['lat'].median()
    lon_median = dataset[dataset['lon']<90]['lon'].median()
    dataset.loc[dataset['lat']>=-120.5,'lat']= lat_median
    dataset.loc[dataset['lon']>=90,'lon']= lon_median
    # Sum and difference of the coordinates (diagonal directions).
    dataset['lat+lon']=dataset['lat']+dataset['lon']
    dataset['lat-lon']=dataset['lat']-dataset['lon']
    # Coordinates rotated by 30 and 60 degrees.
    dataset['Spatial30_1'] = dataset['lat']*np.cos(np.pi/6)+dataset['lon']*np.sin(np.pi/6)
    dataset['Spatial30_2'] = dataset['lon']*np.cos(np.pi/6)-dataset['lat']*np.sin(np.pi/6)
    dataset['Spatial60_1'] = dataset['lat']*np.cos(np.pi/3)+dataset['lon']*np.sin(np.pi/3)
    dataset['Spatial60_2'] = dataset['lon']*np.cos(np.pi/3)-dataset['lat']*np.sin(np.pi/3)
    # Squared distances to the four corners of the bounding box and to the median point.
    dataset['Spatial1'] = (dataset['lat'] - dataset['lat'].min()) ** 2 + (dataset['lon'] - dataset['lon'].min()) ** 2
    dataset['Spatial2'] = (dataset['lat'].max() - dataset['lat']) ** 2 + (dataset['lon'] - dataset['lon'].min()) ** 2
    dataset['Spatial3'] = (dataset['lat'] - dataset['lat'].min()) ** 2 + (dataset['lon'].max() - dataset['lon']) ** 2
    dataset['Spatial4'] = (dataset['lat'].max() - dataset['lat']) ** 2 + (dataset['lon'].max() - dataset['lon']) ** 2
    dataset['Spatial5'] = (dataset['lat'] - lat_median) ** 2 + (dataset['lon'] - lon_median) ** 2
    # PCA components of the coordinates.
    pca = PCA(n_components=2)
    pca.fit(dataset[['lat','lon']])
    XYt = pca.transform(dataset[['lat','lon']])
    dataset['Spatialpca1'] = XYt[:,0]
    dataset['Spatialpca2'] = XYt[:,1]
    # Gaussian mixture cluster label for each point.
    clf = GaussianMixture(n_components=150,covariance_type='diag',random_state=0).fit(dataset[['lat','lon']])
    dataset['Spatialpcacluster'] = clf.predict(dataset[['lat','lon']])
    return dataset

Spatialtransfer(data_train)
print(data_train.head())
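One caveat with the function above: it fits PCA and the Gaussian mixture inside the call, so running it separately on train and test would produce two unrelated sets of components and cluster ids. A minimal sketch of one alternative, fitting on the training coordinates and reusing the fitted models for both sets. The helper names `fit_spatial` and `apply_spatial`, the toy data, and the small cluster count are all assumptions for illustration, not the original author's method.

```python
import numpy as np
import pandas as pd
from sklearn.decomposition import PCA
from sklearn.mixture import GaussianMixture

def fit_spatial(train, n_clusters=3):
    """Fit PCA and a Gaussian mixture on the training coordinates only."""
    xy = train[["lat", "lon"]]
    pca = PCA(n_components=2).fit(xy)
    gmm = GaussianMixture(n_components=n_clusters, covariance_type="diag",
                          random_state=0).fit(xy)
    return pca, gmm

def apply_spatial(df, pca, gmm):
    """Add PCA and cluster columns to a copy of df using already-fitted models."""
    out = df.copy()
    xy = out[["lat", "lon"]]
    comps = pca.transform(xy)
    out["Spatialpca1"] = comps[:, 0]
    out["Spatialpca2"] = comps[:, 1]
    out["Spatialpcacluster"] = gmm.predict(xy)
    return out

# Toy coordinates (random, not the real data).
rng = np.random.default_rng(0)

def make_toy(n):
    return pd.DataFrame({
        "lat": -122.4 + rng.normal(scale=0.02, size=n),
        "lon": 37.77 + rng.normal(scale=0.02, size=n),
    })

train_toy, test_toy = make_toy(300), make_toy(100)
pca_fitted, gmm_fitted = fit_spatial(train_toy)
train_out = apply_spatial(train_toy, pca_fitted, gmm_fitted)
test_out = apply_spatial(test_toy, pca_fitted, gmm_fitted)
print(test_out.head())
```

With this split, a given cluster id refers to the same mixture component in both frames.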

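Save the processed results

# Note: Spatialtransfer is only applied to data_train above; apply it to data_test as well before saving if the test features are needed.
data_train.to_csv("C:\\Users\\Nihil\\Documents\\pythonlearn\\data\\Results\\Crimedatatrain2.csv", index=False)
data_test.to_csv("C:\\Users\\Nihil\\Documents\\pythonlearn\\data\\Results\\Crimedatatest2.csv", index=False)

Check the data types with data_train.info():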

RangeIndex: 878049 entries, 0 to 878048
Data columns (total 33 columns):
Category                 878049 non-null int64
DayOfWeek                878049 non-null int64
lat                      878049 non-null float64
lon                      878049 non-null float64
n_days                   878049 non-null int64
Year                     878049 non-null int64
WeekOfYear               878049 non-null int64
Month                    878049 non-null int64
Hour                     878049 non-null int64
Block                    878049 non-null int64
PdDistrict_CENTRAL       878049 non-null int64
PdDistrict_INGLESIDE     878049 non-null int64
PdDistrict_MISSION       878049 non-null int64
PdDistrict_NORTHERN      878049 non-null int64
PdDistrict_PARK          878049 non-null int64
PdDistrict_RICHMOND      878049 non-null int64
PdDistrict_SOUTHERN      878049 non-null int64
PdDistrict_TARAVAL       878049 non-null int64
PdDistrict_TENDERLOIN    878049 non-null int64
lat+lon                  878049 non-null float64
lat-lon                  878049 non-null float64
Spatial30_1              878049 non-null float64
Spatial30_2              878049 non-null float64
Spatial60_1              878049 non-null float64
Spatial60_2              878049 non-null float64
Spatial1                 878049 non-null float64
Spatial2                 878049 non-null float64
Spatial3                 878049 non-null float64
Spatial4                 878049 non-null float64
Spatial5                 878049 non-null float64
Spatialpca1              878049 non-null float64
Spatialpca2              878049 non-null float64
Spatialpcacluster        878049 non-null int64
dtypes: float64(15), int64(18)
memory usage: 221.1 MB
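Given the dtypes listed above, selecting every non-float column as categorical would also include integer columns such as n_days, which behaves more like a continuous quantity. A minimal sketch of picking categorical columns explicitly by name instead; the particular choice in `cat_cols` is a hypothetical example, not a recommendation from the reference notebook.

```python
import pandas as pd

X_demo = pd.DataFrame({
    "lat": [-122.43, -122.42],
    "n_days": [4510, 4509],
    "Hour": [23, 22],
    "Spatialpcacluster": [85, 8],
})

# Hypothetical choice: only these integer-coded columns are treated as categorical.
cat_cols = ["Hour", "Spatialpcacluster"]

# Convert the names to positional indices, the form used for cat_features in this post.
cat_idx = [X_demo.columns.get_loc(c) for c in cat_cols]
print(cat_idx)
```

Which columns are best treated as categorical is an empirical question; comparing validation scores for a couple of choices is one way to decide.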

Using CatBoostClassifier

X = data_train.drop(['Category'], axis=1)
y = data_train.Category
# np.float was removed in NumPy 1.24; compare against np.float64 instead.
# Every non-float column is treated as a categorical feature.
categorical_features_indices = np.where(X.dtypes != np.float64)[0]

from sklearn.model_selection import train_test_split
# train_size=0.3 trains on 30% of the rows and validates on the remaining 70%.
X_train,X_validation,y_train,y_validation = train_test_split(X,y,random_state=42,train_size=0.3)

from catboost import CatBoostClassifier
from catboost import Pool
from catboost import cv
from sklearn.metrics import accuracy_score

model = CatBoostClassifier(eval_metric='Accuracy',use_best_model=True,random_seed=42)
model.fit(X_train,y_train,cat_features=categorical_features_indices,eval_set=(X_validation,y_validation))

Training was too slow, so I stopped before it finished.
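When a full run is too slow, one option is to iterate on a small stratified subsample first and compare any model against a trivial baseline. The sketch below uses synthetic data and only scikit-learn; `X_demo`, `y_demo`, `priors`, and `baseline` are hypothetical names. It scores the baseline with `log_loss`, which is imported at the top of this post, on the assumption that log loss is the metric of interest.

```python
import numpy as np
from sklearn.metrics import log_loss
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
X_demo = rng.normal(size=(1000, 4))
y_demo = rng.choice([0, 1, 2], size=1000, p=[0.6, 0.3, 0.1])

# Keep 10% of the rows while preserving the class proportions.
X_small, _, y_small, _ = train_test_split(
    X_demo, y_demo, train_size=0.1, stratify=y_demo, random_state=42
)

# Baseline: predict the subsample's class frequencies for every row.
priors = np.bincount(y_small, minlength=3) / len(y_small)
baseline = log_loss(y_demo, np.tile(priors, (len(y_demo), 1)), labels=[0, 1, 2])
print(len(X_small), priors, baseline)
```

A model trained on the subsample should beat this baseline before it is worth paying for a run on the full training set.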