Flink-CDC Basics


I. Introduction to CDC

1. What is CDC

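CDC is short for Change Data Capture. The core idea is to monitor and capture changes in a database (inserts, updates and deletes of data or tables), record these changes completely and in the order they occur, and write them to message middleware so that other services can subscribe to and consume them.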

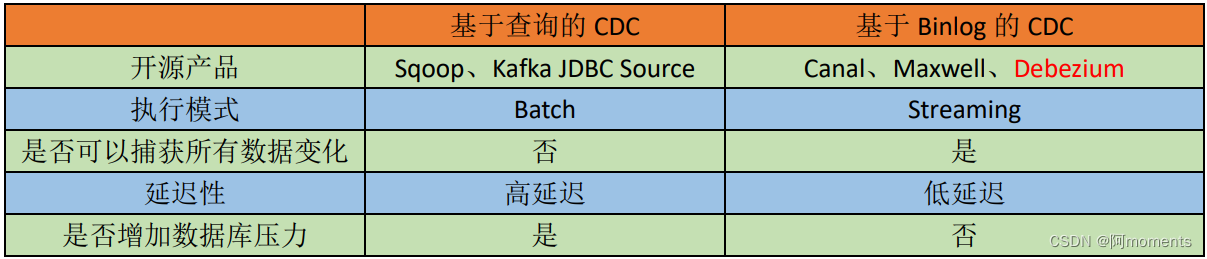
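To make the idea concrete, here is a toy, in-memory sketch in plain Java (no Flink, database or message queue involved; all class names are hypothetical). Every insert, update and delete on a source table is appended in order to a change log, and a subscriber that replays the log ends up with the same data. Real CDC tools read this log from the database itself and publish it through middleware such as Kafka.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy illustration of the CDC idea; hypothetical classes, not a Flink CDC API. */
public class ToyCdc {

    /** One captured change: operation type plus the row images before and after it. */
    static final class ChangeEvent {
        final String op;      // Debezium-style codes: "c" = create, "u" = update, "d" = delete
        final String key;
        final String before;  // null for inserts
        final String after;   // null for deletes

        ChangeEvent(String op, String key, String before, String after) {
            this.op = op;
            this.key = key;
            this.before = before;
            this.after = after;
        }

        @Override
        public String toString() {
            return op + "(" + key + ": " + before + " -> " + after + ")";
        }
    }

    /** Source table whose every modification is appended, in order, to a change log. */
    static final class CapturedTable {
        final Map<String, String> rows = new HashMap<>();
        final List<ChangeEvent> changeLog = new ArrayList<>();

        void insert(String key, String value) {
            rows.put(key, value);
            changeLog.add(new ChangeEvent("c", key, null, value));
        }

        void update(String key, String value) {
            String old = rows.put(key, value);
            changeLog.add(new ChangeEvent("u", key, old, value));
        }

        void delete(String key) {
            String old = rows.remove(key);
            changeLog.add(new ChangeEvent("d", key, old, null));
        }
    }

    /** A subscriber that applies the change events in order to its own copy of the table. */
    static Map<String, String> replay(List<ChangeEvent> log) {
        Map<String, String> replica = new HashMap<>();
        for (ChangeEvent e : log) {
            if (e.op.equals("d")) {
                replica.remove(e.key);
            } else {
                replica.put(e.key, e.after);
            }
        }
        return replica;
    }

    public static void main(String[] args) {
        CapturedTable table = new CapturedTable();
        table.insert("1001", "zhangsan");
        table.update("1001", "lisi");
        table.insert("1002", "wangwu");
        table.delete("1002");

        System.out.println(table.changeLog);
        System.out.println(replay(table.changeLog).equals(table.rows)); // true
    }
}
```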

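2. Types of CDC

CDC implementations fall into two main categories, query-based and Binlog-based:

(1) Query-based CDC (e.g. Sqoop, Kafka JDBC Source) periodically queries the tables in batch mode. Because it only sees the state at each poll, it can miss changes (a row updated several times between two polls shows only its last value, and deleted rows are hard to detect), its latency is higher, and the polling queries add load to the database.

(2) Binlog-based CDC (e.g. Canal, Maxwell, Debezium) reads the database's binary log in streaming mode, so it captures every change with low latency and without extra query load.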
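The practical difference can be sketched with a small simulation in plain Java (hypothetical class and method names). The query-based side is simplified to a diff of two full snapshots; real query-based tools usually filter on a timestamp or auto-increment column instead, which additionally makes deletes invisible. Even with a full diff, the intermediate changes between the two polls are lost, while the log-based side sees every one of them.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical simulation contrasting query-based and log-based change capture. */
public class PollingVsLog {

    /** A table that also appends every modification to its own log, as a binlog does. */
    static final class LoggedTable {
        final Map<String, String> rows = new HashMap<>();
        final List<String> binlog = new ArrayList<>();

        void write(String key, String value) {
            String old = rows.put(key, value);
            binlog.add((old == null ? "insert " : "update ") + key + "=" + value);
        }

        void delete(String key) {
            rows.remove(key);
            binlog.add("delete " + key);
        }
    }

    /** Query-based capture: diff the previous poll's snapshot against the current one. */
    static List<String> diffSnapshots(Map<String, String> prev, Map<String, String> curr) {
        List<String> changes = new ArrayList<>();
        for (Map.Entry<String, String> e : curr.entrySet()) {
            String old = prev.get(e.getKey());
            if (old == null) {
                changes.add("insert " + e.getKey() + "=" + e.getValue());
            } else if (!old.equals(e.getValue())) {
                changes.add("update " + e.getKey() + "=" + e.getValue());
            }
        }
        for (String key : prev.keySet()) {
            if (!curr.containsKey(key)) {
                changes.add("delete " + key);
            }
        }
        return changes;
    }

    public static void main(String[] args) {
        LoggedTable table = new LoggedTable();
        Map<String, String> poll1 = new HashMap<>(table.rows); // first poll: empty table
        table.write("1001", "a");
        table.write("1001", "b");
        table.write("1002", "x");
        table.delete("1002");
        Map<String, String> poll2 = new HashMap<>(table.rows); // second poll

        System.out.println("query-based:  " + diffSnapshots(poll1, poll2)); // [insert 1001=b]
        System.out.println("binlog-based: " + table.binlog);
        // [insert 1001=a, update 1001=b, insert 1002=x, delete 1002]
    }
}
```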

3. Flink-CDC

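The Flink community developed the flink-cdc-connectors component (built on Debezium). It is a source component that can read both the full existing data and the incremental changes directly from databases such as MySQL and PostgreSQL.

It is open source: https://github.com/ververica/flink-cdc-connectors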

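Why not use Canal in the project? What does Flink CDC offer?

With Canal, the data flow would typically be: MySQL -> Canal -> Kafka -> Flink;

with Flink CDC it can be: MySQL -> Flink CDC -> Flink.

This removes the message middleware from the pipeline and makes it more efficient (if a layered architecture requires it, Kafka can still be added after Flink CDC).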

II. Flink CDC Hands-On Examples

1. Using the DataStream API

1.1 Add the dependencies

<properties>
    <flink-version>1.13.0</flink-version>
</properties>

<dependencies>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-java</artifactId>
        <version>${flink-version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-streaming-java_2.12</artifactId>
        <version>${flink-version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-clients_2.12</artifactId>
        <version>${flink-version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>3.1.3</version>
    </dependency>
    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
        <version>5.1.49</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-table-planner-blink_2.12</artifactId>
        <version>${flink-version}</version>
    </dependency>
    <dependency>
        <groupId>com.ververica</groupId>
        <artifactId>flink-connector-mysql-cdc</artifactId>
        <version>2.0.0</version>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>fastjson</artifactId>
        <version>1.2.75</version>
    </dependency>
</dependencies>

<build>
    <plugins>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-assembly-plugin</artifactId>
            <version>3.0.0</version>
            <configuration>
                <descriptorRefs>
                    <descriptorRef>jar-with-dependencies</descriptorRef>
                </descriptorRefs>
            </configuration>
            <executions>
                <execution>
                    <id>make-assembly</id>
                    <phase>package</phase>
                    <goals>
                        <goal>single</goal>
                    </goals>
                </execution>
            </executions>
        </plugin>
    </plugins>
</build>

1.2 Write the code

package com.atguigu;

import com.ververica.cdc.connectors.mysql.MySqlSource;
import com.ververica.cdc.connectors.mysql.table.StartupOptions;
import com.ververica.cdc.debezium.DebeziumSourceFunction;
import com.ververica.cdc.debezium.StringDebeziumDeserializationSchema;
import org.apache.flink.api.common.restartstrategy.RestartStrategies;
import org.apache.flink.runtime.state.filesystem.FsStateBackend;
import org.apache.flink.streaming.api.CheckpointingMode;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.CheckpointConfig;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class FlinkCDC {

    public static void main(String[] args) throws Exception {
        // 1. Get the Flink execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // 2. Flink CDC keeps the binlog read position as state in checkpoints.
        //    To resume from where the job stopped, restart it from a checkpoint or savepoint.
        // 2.1 Enable checkpointing: one checkpoint every 5 seconds
        env.enableCheckpointing(5000L);
        // 2.2 Checkpoint consistency semantics
        env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);
        // 2.3 Keep the last checkpoint when the job is cancelled
        env.getCheckpointConfig().enableExternalizedCheckpoints(
                CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);
        // 2.4 Restart strategy for automatic recovery from checkpoints: 3 attempts, 2 s apart
        env.setRestartStrategy(RestartStrategies.fixedDelayRestart(3, 2000L));
        // 2.5 State backend
        env.setStateBackend(new FsStateBackend("hdfs://hadoop102:8020/flinkCDC"));
        // 2.6 User name used to access HDFS
        System.setProperty("HADOOP_USER_NAME", "atguigu");

        // 3. Build the SourceFunction with Flink CDC
        DebeziumSourceFunction<String> sourceFunction = MySqlSource.<String>builder()
                .hostname("hadoop102")
                .port(3306)
                .username("root")
                .password("000000")
                .databaseList("cdc_test")
                // Optional: if omitted, all tables of the databases listed above are read.
                // Note: tables must be specified as "db.table".
                .tableList("cdc_test.user_info")
                .deserializer(new StringDebeziumDeserializationSchema())
                .startupOptions(StartupOptions.initial())
                .build();

        // 4. Read data from MySQL with the CDC source
        DataStreamSource<String> dataStreamSource = env.addSource(sourceFunction);

        // 5. Print the data
        dataStreamSource.print();

        // 6. Launch the job
        env.execute("FlinkCDC");
    }
}
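Comment 2 in the code is what makes resuming after a failure possible: the source's position in the binlog is kept as checkpointed state. A toy model of that mechanism in plain Java (hypothetical LogReader class; the integer position stands in for the binlog file name and offset that the real source stores) looks like this:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Toy model of resuming a log reader from a checkpointed position (hypothetical classes). */
public class ResumeFromCheckpoint {

    static final class LogReader {
        private final List<String> log;
        private int position; // stands in for the binlog file/offset kept in Flink state

        LogReader(List<String> log, int restoredPosition) {
            this.log = log;
            this.position = restoredPosition;
        }

        /** Reads up to n events starting at the current position. */
        List<String> poll(int n) {
            List<String> out = new ArrayList<>();
            while (out.size() < n && position < log.size()) {
                out.add(log.get(position++));
            }
            return out;
        }

        /** What a checkpoint would store for this reader. */
        int snapshotPosition() {
            return position;
        }
    }

    public static void main(String[] args) {
        List<String> binlog = Arrays.asList("e1", "e2", "e3", "e4", "e5");

        LogReader first = new LogReader(binlog, 0);
        first.poll(2);                               // e1, e2 processed
        int checkpointed = first.snapshotPosition(); // checkpoint completes at position 2
        first.poll(1);                               // e3 read, then the job fails

        LogReader restarted = new LogReader(binlog, checkpointed);
        System.out.println(restarted.poll(10));      // [e3, e4, e5]
    }
}
```

Note that e3 is read again after the restart: everything consumed after the last completed checkpoint is replayed. Flink's checkpointing keeps operator state exactly-once in this situation, but exactly-once output to external systems additionally requires an idempotent or transactional sink.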

1.3 Test the example

(1) Package the project and upload the jar to the Linux server

(2) Enable the MySQL binlog and restart MySQL

(3) Start the Flink cluster

[atguigu@hadoop102 flink-standalone]$ bin/start-cluster.sh

(4) Start the HDFS cluster

[atguigu@hadoop102 flink-standalone]$ start-dfs.sh

(5) Submit the job

[atguigu@hadoop102 flink-standalone]$ bin/flink run -c com.atguigu.FlinkCDC flink-1.0-SNAPSHOT-jar-with-dependencies.jar

(6) Insert, update or delete rows in the MySQL table cdc_test.user_info

(7) Create a savepoint for the running Flink job

[atguigu@hadoop102 flink-standalone]$ bin/flink savepoint JobId hdfs://hadoop102:8020/flink/save

(8) Stop the job, then restart it from the savepoint

[atguigu@hadoop102 flink-standalone]$ bin/flink run -s hdfs://hadoop102:8020/flink/save/... -c com.atguigu.FlinkCDC flink-1.0-SNAPSHOT-jar-with-dependencies.jar
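To confirm that step (2) took effect before submitting the job, the server settings can be checked from the client side. The sketch below (hypothetical BinlogCheck class) queries the binlog variables over JDBC, using the host and credentials from this article, and compares them with what the Debezium MySQL connector, on which Flink CDC is built, expects: binlog enabled, binlog_format=ROW and binlog_row_image=FULL. Only the standard java.sql API is used; the MySQL driver must be on the classpath at runtime.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.Statement;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Pre-flight check of the MySQL binlog settings (hypothetical helper, not part of Flink CDC). */
public class BinlogCheck {

    /** Returns a list of problems; an empty list means the binlog settings look usable for CDC. */
    static List<String> problems(Map<String, String> vars) {
        List<String> problems = new ArrayList<>();
        if (!"ON".equalsIgnoreCase(vars.get("log_bin"))) {
            problems.add("log_bin is not ON: the binlog is disabled");
        }
        if (!"ROW".equalsIgnoreCase(vars.get("binlog_format"))) {
            problems.add("binlog_format should be ROW, found " + vars.get("binlog_format"));
        }
        String rowImage = vars.get("binlog_row_image");
        if (rowImage != null && !"FULL".equalsIgnoreCase(rowImage)) {
            problems.add("binlog_row_image should be FULL, found " + rowImage);
        }
        return problems;
    }

    public static void main(String[] args) throws Exception {
        // Host and credentials as used in this article; the JDBC driver comes from mysql-connector-java
        String url = "jdbc:mysql://hadoop102:3306/?useSSL=false";
        Map<String, String> vars = new HashMap<>();
        try (Connection conn = DriverManager.getConnection(url, "root", "000000");
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery("SHOW VARIABLES WHERE Variable_name IN "
                     + "('log_bin', 'binlog_format', 'binlog_row_image')")) {
            while (rs.next()) {
                vars.put(rs.getString(1), rs.getString(2)); // Variable_name, Value
            }
        }
        List<String> found = problems(vars);
        System.out.println(found.isEmpty() ? "binlog settings OK" : String.join("\n", found));
    }
}
```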

2. Using Flink SQL

import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.table.api.Table;
import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;
import org.apache.flink.types.Row;

public class FlinkSQLCDC {

    public static void main(String[] args) throws Exception {
        // 1. Get the execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);
        StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

        // 2. Create the CDC table with Flink SQL DDL
        //    (Flink only accepts primary keys declared NOT ENFORCED)
        tableEnv.executeSql("CREATE TABLE user_info ( " +
                " id STRING PRIMARY KEY NOT ENFORCED, " +
                " name STRING, " +
                " sex STRING " +
                ") WITH ( " +
                " 'connector' = 'mysql-cdc', " +
                " 'scan.startup.mode' = 'latest-offset', " +
                " 'hostname' = 'hadoop102', " +
                " 'port' = '3306', " +
                " 'username' = 'root', " +
                " 'password' = '000000', " +
                " 'database-name' = 'cdc_test', " +
                " 'table-name' = 'user_info' " +
                ")");

        // 3. Query the table and convert the result into a retract stream for printing
        Table table = tableEnv.sqlQuery("select * from user_info");
        DataStream<Tuple2<Boolean, Row>> retractStream = tableEnv.toRetractStream(table, Row.class);
        retractStream.print();

        // 4. Launch the job
        env.execute("FlinkSQLCDC");
    }
}
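toRetractStream turns the changelog of the CDC table into (Boolean, Row) pairs: true adds a row and false retracts a previously emitted one, so an UPDATE arrives as a retraction of the old row followed by the new row, and a DELETE as a retraction. The following plain-Java model (hypothetical classes, rows simplified to strings) shows how a consumer could rebuild the current table contents from such messages:

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of consuming a retract stream; not a Flink API. */
public class RetractDemo {

    /** Mirrors Tuple2<Boolean, Row>: add == true inserts the row, false retracts it. */
    static final class Message {
        final boolean add;
        final String row;

        Message(boolean add, String row) {
            this.add = add;
            this.row = row;
        }
    }

    /** Applies retract messages in order to a materialized result. */
    static List<String> materialize(List<Message> messages) {
        List<String> result = new ArrayList<>();
        for (Message m : messages) {
            if (m.add) {
                result.add(m.row);
            } else {
                result.remove(m.row); // removes one occurrence of the retracted row
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<Message> stream = new ArrayList<>();
        stream.add(new Message(true, "1001,zhangsan,male"));   // INSERT
        stream.add(new Message(false, "1001,zhangsan,male"));  // UPDATE: retract the old row...
        stream.add(new Message(true, "1001,lisi,male"));       // ...then add the new row
        stream.add(new Message(true, "1002,wangwu,female"));   // INSERT
        stream.add(new Message(false, "1002,wangwu,female"));  // DELETE
        System.out.println(materialize(stream)); // [1001,lisi,male]
    }
}
```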

3. Custom deserializer

package com.atguigu;

import com.atguigu.func.CustomerDeserializationSchema;
import com.ververica.cdc.connectors.mysql.MySqlSource;
import com.ververica.cdc.connectors.mysql.table.StartupOptions;
import com.ververica.cdc.debezium.DebeziumSourceFunction;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class FlinkCDC2 {

    public static void main(String[] args) throws Exception {
        // 1. Get the Flink execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // 1.1 Enable checkpointing (disabled here; to use it, uncomment these lines
        //     and import CheckpointingMode and FsStateBackend)
        // env.enableCheckpointing(5000);
        // env.getCheckpointConfig().setCheckpointTimeout(10000);
        // env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);
        // env.getCheckpointConfig().setMaxConcurrentCheckpoints(1);
        // env.setStateBackend(new FsStateBackend("hdfs://hadoop102:8020/cdc-test/ck"));

        // 2. Build the SourceFunction with Flink CDC
        DebeziumSourceFunction<String> sourceFunction = MySqlSource.<String>builder()
                .hostname("hadoop102")
                .port(3306)
                .username("root")
                .password("000000")
                .databaseList("cdc_test")
                .tableList("cdc_test.user_info")
                // Use the custom deserializer
                .deserializer(new CustomerDeserializationSchema())
                .startupOptions(StartupOptions.initial())
                .build();

        DataStreamSource<String> dataStreamSource = env.addSource(sourceFunction);

        // 3. Print the data
        dataStreamSource.print();

        // 4. Launch the job
        env.execute("FlinkCDC");
    }
}

Deserializer implementation

package com.atguigu.func;

import com.alibaba.fastjson.JSONObject;
import com.ververica.cdc.debezium.DebeziumDeserializationSchema;
import io.debezium.data.Envelope;
import org.apache.flink.api.common.typeinfo.BasicTypeInfo;
import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.util.Collector;
import org.apache.kafka.connect.data.Field;
import org.apache.kafka.connect.data.Schema;
import org.apache.kafka.connect.data.Struct;
import org.apache.kafka.connect.source.SourceRecord;

import java.util.List;

public class CustomerDeserializationSchema implements DebeziumDeserializationSchema<String> {

    /**
     * Output format:
     * {
     *   "db":"",
     *   "tableName":"",
     *   "before":{"id":"1001","name":""...},
     *   "after":{"id":"1001","name":""...},
     *   "op":""
     * }
     */
    @Override
    public void deserialize(SourceRecord sourceRecord, Collector<String> collector) throws Exception {
        // JSON object that holds the result
        JSONObject result = new JSONObject();

        // Database and table name: the topic has the form <server name>.<database>.<table>
        String topic = sourceRecord.topic();
        String[] fields = topic.split("\\.");
        result.put("db", fields[1]);
        result.put("tableName", fields[2]);

        // Row image before the change (null for inserts and snapshot reads)
        Struct value = (Struct) sourceRecord.value();
        Struct before = value.getStruct("before");
        JSONObject beforeJson = new JSONObject();
        if (before != null) {
            // Column metadata
            Schema schema = before.schema();
            List<Field> fieldList = schema.fields();
            for (Field field : fieldList) {
                beforeJson.put(field.name(), before.get(field));
            }
        }
        result.put("before", beforeJson);

        // Row image after the change (null for deletes)
        Struct after = value.getStruct("after");
        JSONObject afterJson = new JSONObject();
        if (after != null) {
            // Column metadata
            Schema schema = after.schema();
            List<Field> fieldList = schema.fields();
            for (Field field : fieldList) {
                afterJson.put(field.name(), after.get(field));
            }
        }
        result.put("after", afterJson);

        // Operation type, e.g. READ, CREATE, UPDATE, DELETE
        Envelope.Operation operation = Envelope.operationFor(sourceRecord);
        result.put("op", operation);

        // Emit the record as a JSON string
        collector.collect(result.toJSONString());
    }

    @Override
    public TypeInformation<String> getProducedType() {
        return BasicTypeInfo.STRING_TYPE_INFO;
    }
}
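The deserializer depends on two conventions of the records Flink CDC hands over. First, the topic name has the form <server name>.<database>.<table>; with the MySqlSource used here the server name is typically mysql_binlog_source, which is why fields[1] and fields[2] hold the database and table. Second, the operation is one of Debezium's single-letter op codes, which Envelope.operationFor maps to an Envelope.Operation constant. A dependency-free sketch of both (hypothetical helper names), which also guards against a topic that carries no table name:

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helpers mirroring two conventions the deserializer relies on. */
public class CdcRecordHelpers {

    /** Splits "server.db.table" into {db, table}; returns null if the topic names no table. */
    static String[] dbAndTable(String topic) {
        String[] parts = topic.split("\\.");
        if (parts.length < 3) {
            return null;
        }
        return new String[]{parts[1], parts[2]};
    }

    /** Debezium op codes and the Envelope.Operation constant each one corresponds to. */
    static final Map<String, String> OP_CODES = new LinkedHashMap<>();

    static {
        OP_CODES.put("r", "READ");    // row read during the initial snapshot (StartupOptions.initial())
        OP_CODES.put("c", "CREATE");  // insert
        OP_CODES.put("u", "UPDATE");
        OP_CODES.put("d", "DELETE");
    }

    public static void main(String[] args) {
        String[] dt = dbAndTable("mysql_binlog_source.cdc_test.user_info");
        System.out.println(dt[0] + " / " + dt[1]); // cdc_test / user_info
        System.out.println(OP_CODES.get("c"));     // CREATE
    }
}
```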