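Bulk downloading OceanColor data

Introduction: after suffering through downloading this data and tinkering for a whole day, I am writing up some notes (I did not dig very deep, so this covers only the parts I actually solved).

OceanColor website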

OceanColor offers three ways to download in bulk:

1. wget,

2. cURL,

3. a browser add-on (DownThemAll)

Below I describe the bulk download methods I used.

Browser add-on method

The add-on I used is DownThemAll for Firefox.

OceanColor describes it as follows:

If you prefer a GUI based option, there is an add-on for the Firefox web browser called ‘DownThemAll’. It is easy to configure to download only what you want from the page (even has a default for archived products -gz, tar, bz2, etc.). It allows putting a limit concurrent downloads, which is important for downloading from our servers as we limit connections to one concurrent per file and 3 files per IP - so don’t try the “accelerate” features as you’re IP may get blocked.

Recommended preference settings:

1) Set the concurrent downloads to 1.

2) There is an option under the ‘Advanced’ tab called ‘Multipart download’. Set the ‘Max. number of segments per download’ to 1.

3) Since this download manager does not efficiently close connection states, you may find that file downloads will time out. You may want to set the Auto Retry setting to retry each (1) minute with Max. Retries set to 10.
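
The recommended settings boil down to a simple policy: fetch one file at a time over a single connection and, when a download fails, wait a minute and retry up to ten times. The Python sketch below illustrates that policy only; it is not how DownThemAll works internally. The names `fetch_to_dir` and `download_sequentially` are hypothetical helpers introduced for this example, and any login the server may require is not handled.

```python
import shutil
import time
import urllib.request
from pathlib import Path


def fetch_to_dir(url, out_dir="."):
    """Download one URL into out_dir over a single connection (hypothetical helper)."""
    target = Path(out_dir) / url.rsplit("/", 1)[-1]
    with urllib.request.urlopen(url, timeout=120) as response, open(target, "wb") as out:
        shutil.copyfileobj(response, out)
    return target


def download_sequentially(urls, fetch=fetch_to_dir, max_retries=10,
                          wait_seconds=60, sleep=time.sleep):
    """Fetch URLs strictly one at a time; return the URLs that never succeeded."""
    failed = []
    for url in urls:
        for attempt in range(1 + max_retries):
            try:
                fetch(url)
                break  # this file is done, move on to the next one
            except OSError:  # covers URLError, HTTPError and socket timeouts
                if attempt < max_retries:
                    sleep(wait_seconds)  # pause before the next retry
        else:
            failed.append(url)  # every attempt failed
    return failed
```

Calling `download_sequentially(list_of_urls)` returns the URLs that still failed after all retries, so they can be fed back in later.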

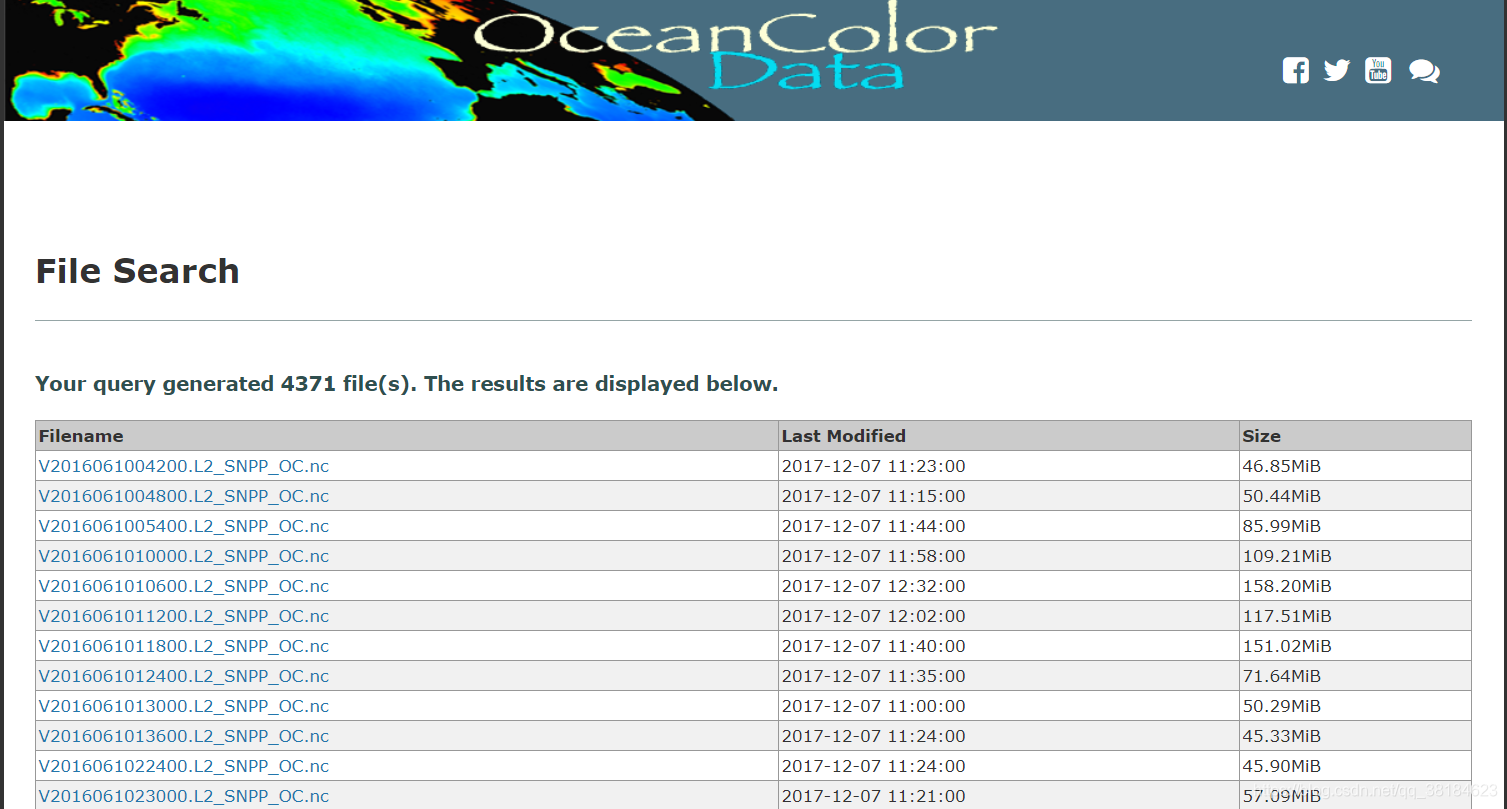

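Using OceanColor's data file search, set the time period, sensor, product level, and product type to get a list of data files like the one shown in the figure. Then use DownThemAll directly: apply a filter to pick out the data files with the .nc suffix and download them.

However, for L2 data I could not find an input parameter for restricting the region, so this method is probably better suited to global products such as L3.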
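
The filter step is nothing more than a suffix match on the list of links. As a rough illustration in Python (the function name `select_nc_links` and the sample URLs are made up for this example and are not real OceanColor file names):

```python
from urllib.parse import urlparse


def select_nc_links(links, suffix=".nc"):
    """Keep only links whose URL path ends with the given suffix."""
    return [link for link in links
            if urlparse(link).path.lower().endswith(suffix)]


# Invented sample links: only the first and last point at .nc files.
sample_links = [
    "https://example.org/data/granule_A.L3m.nc",
    "https://example.org/data/granule_A.L3m.nc.png",
    "https://example.org/search?page=2",
    "https://example.org/data/granule_B.L3m.NC?download=1",
]
print(select_nc_links(sample_links))
```

Matching on the URL path rather than the whole string keeps query strings from hiding the extension.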

wget method

To download L2 data for a specific region, I tried wget.

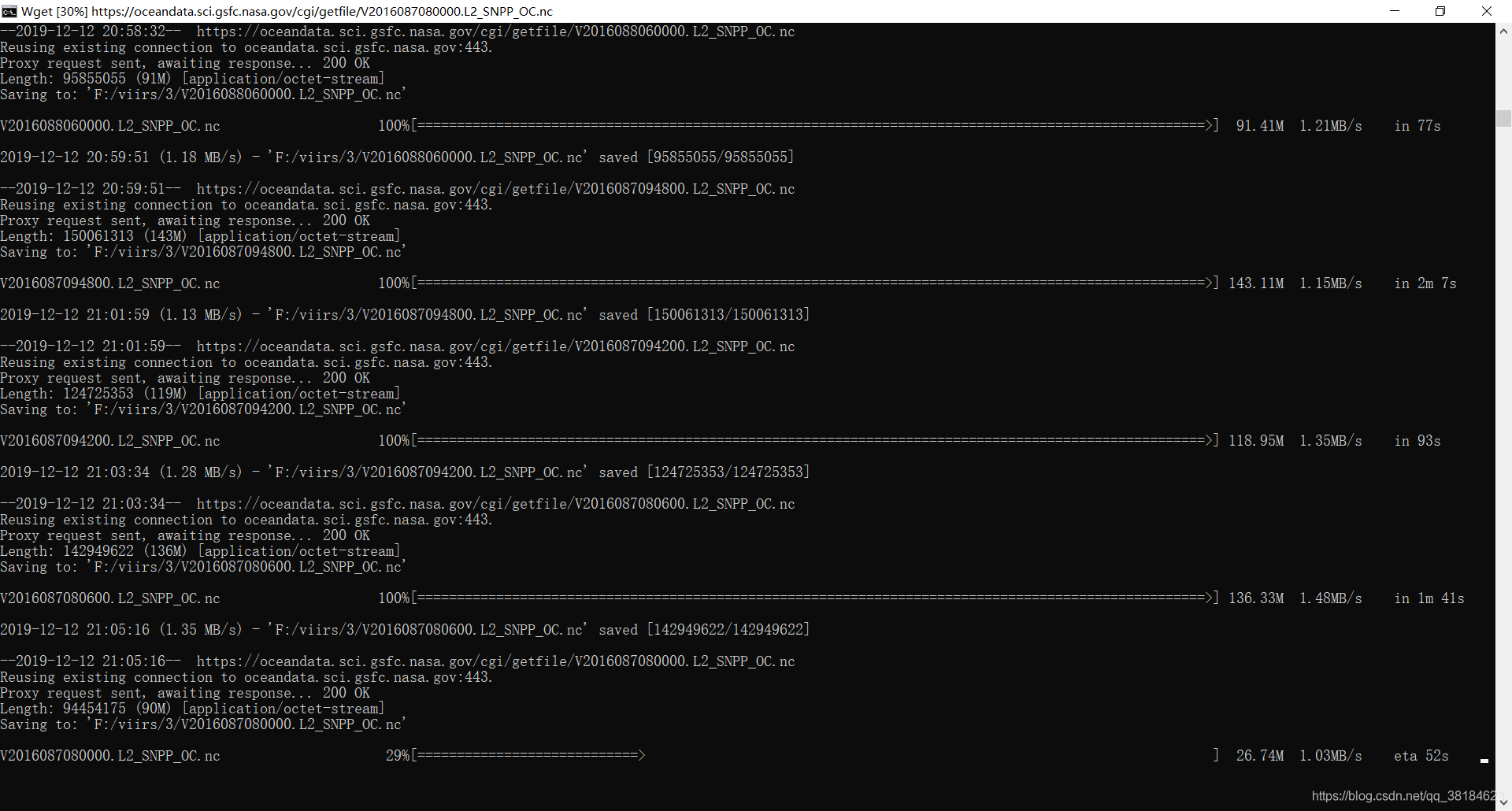

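In the L1&L2 Browser, specify the time period, sensor, and either a sea area name or latitude/longitude bounds. Then click "order data" in the upper-right corner of the search results page to create an order, and use the URLs it generates for the bulk download.

Getting wget: wget for win

Using a proxy on the command line (Windows cmd syntax):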

set http_proxy=http://127.0.0.1:1080

set https_proxy=http://127.0.0.1:1080

The proxy port here is 1080.

curl https://www.网站.com

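Test the proxy with curl, not ping (网站 above is a placeholder for the site's domain). ping uses ICMP, which does not go through an HTTP proxy, so it says nothing about whether the proxy works.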
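
To check from a script that the proxy variables are actually visible, the Python standard library can read them back. This is a small sketch that assumes the same local proxy address as in the `set` commands; `check_through_proxy` is a hypothetical helper, and the URL you pass to it is up to you.

```python
import os
import urllib.request

# Same values as the `set` commands (assumed local proxy on port 1080).
os.environ["http_proxy"] = "http://127.0.0.1:1080"
os.environ["https_proxy"] = "http://127.0.0.1:1080"

# getproxies() reports the proxies urllib would use; when the environment
# variables are set, they are what it returns.
proxies = urllib.request.getproxies()
print(proxies.get("http"), proxies.get("https"))


def check_through_proxy(url, timeout=30):
    """Return the HTTP status of url fetched via the configured proxies."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler(proxies))
    with opener.open(url, timeout=timeout) as response:
        return response.status
```

If `check_through_proxy` raises an error while the same URL opens in a browser, the proxy address or port is the first thing to check.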

wget --content-disposition -i manifest-file-name -P yourfilepath

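Some wget options:

-P % set the download directory;

-i URLs.txt % download every URL listed in a file;

-t 20 % set the number of retries;

-T 120 % set the timeout, in seconds;

-c % resume a partial download (useful for large files);

-o download.log URL % write the download log to a file;

-O % save under a different file name;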
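
Several of these options can be combined into one command. Here is one way to assemble and launch it from Python. It is only a sketch: `build_wget_command` and `run_wget` are hypothetical names, the manifest and directory names are placeholders, and wget must already be on your PATH.

```python
import subprocess


def build_wget_command(manifest, out_dir, tries=20, timeout=120, log_file=None):
    """Assemble a wget argument list from the options listed in this post."""
    command = [
        "wget",
        "--content-disposition",  # name files as the server suggests
        "-t", str(tries),         # number of retries per file
        "-T", str(timeout),       # timeout in seconds
    ]
    if log_file:
        command += ["-o", log_file]  # send wget's messages to a log file
    command += ["-i", manifest,      # read the URLs from the manifest file
                "-P", out_dir]       # save downloads into this directory
    return command


def run_wget(manifest, out_dir):
    """Run wget and return its exit code (0 means success)."""
    return subprocess.run(build_wget_command(manifest, out_dir)).returncode


print(" ".join(build_wget_command("URLs.txt", "downloads")))
```

Passing the arguments as a list instead of one string avoids quoting problems when the download directory contains spaces.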

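For the full set of options, see other people's articles, which describe them in more detail.

At this point we can sit back and quietly watch the download progress bar crawl along.

Thanks for reading. My knowledge is limited, so if anything here is wrong, please point it out.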
